|

|

@@ -1,87 +0,0 @@

|

|

|

----

|

|

|

-title: "Scheduling"

|

|

|

-date: 2019-03-13T18:28:09-07:00

|

|

|

-draft: false

|

|

|

-weight: 4

|

|

|

----

|

|

|

-

|

|

|

-Scheduling of queries or jobs (e.g. run this SQL query every day at 5am) is currently done via Apache Oozie and will be opened up to other schedulers with [HUE-3797](https://issues.cloudera.org/browse/HUE-3797). [Apache Oozie](http://oozie.apache.org) is a very robust scheduler for data warehouses.

|

|

|

-

|

|

|

-## Editor

|

|

|

-

|

|

|

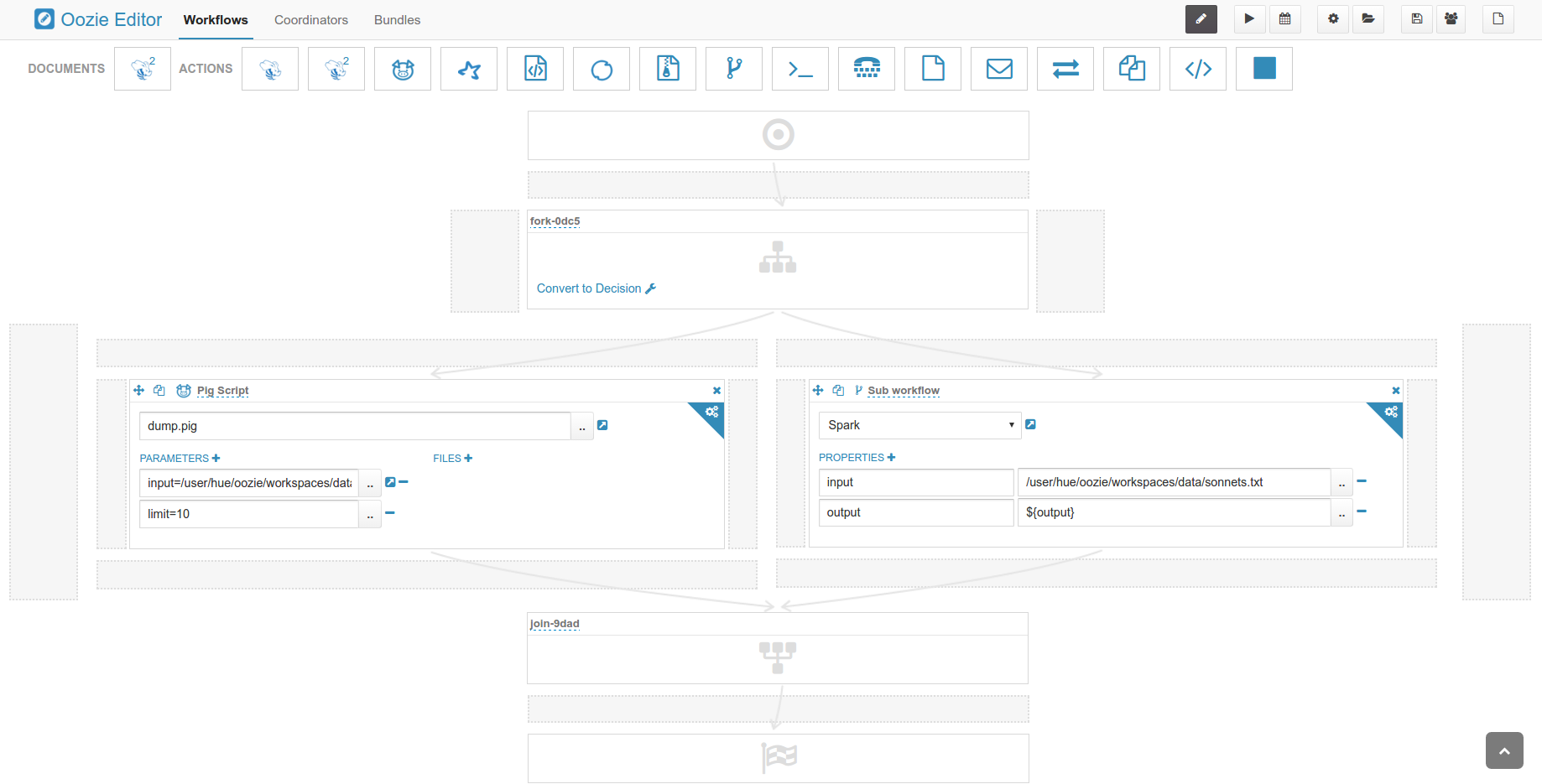

-Workflows can be built by pointing to query scripts on the file system or by selecting one of your saved queries. A workflow can then be run at regular intervals by attaching a schedule to it.
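
To make the idea of attaching a schedule more concrete, here is a minimal sketch of the kind of Oozie coordinator XML that triggers a workflow every day at 5am. It is an illustration only, not the XML Hue itself generates: the function name `build_coordinator`, the application path, the dates and the coordinator schema version are assumptions.

```python
import xml.etree.ElementTree as ET

# Assumed schema version; adjust to what your Oozie server supports.
COORD_NS = "uri:oozie:coordinator:0.4"


def build_coordinator(name, app_path, cron, start, end, timezone="UTC"):
    """Return coordinator XML that runs the workflow at app_path on a cron schedule."""
    root = ET.Element("coordinator-app", {
        "name": name,
        "frequency": cron,   # cron-like frequency, e.g. "0 5 * * *" for 5am daily
        "start": start,      # first time the schedule is active
        "end": end,          # time after which no more runs are created
        "timezone": timezone,
        "xmlns": COORD_NS,
    })
    action = ET.SubElement(root, "action")
    workflow = ET.SubElement(action, "workflow")
    # Directory on HDFS that holds the workflow definition (hypothetical path).
    ET.SubElement(workflow, "app-path").text = app_path
    return ET.tostring(root, encoding="unicode")


coordinator_xml = build_coordinator(
    name="daily-query",
    app_path="${nameNode}/user/demo/workflows/daily-query",
    cron="0 5 * * *",
    start="2019-03-14T05:00Z",
    end="2020-03-14T05:00Z",
)
print(coordinator_xml)
```

The schedule only references the workflow by path, so the same workflow can be submitted manually or reused by several schedules.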

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

-Many users leverage the workflow editor to get the Oozie XML configuration of their workflows.

|

|

|

-

|

|

|

-### Tutorial

|

|

|

-

|

|

|

-Running Spark jobs with Spark on YARN often requires some trial and error before it works.

|

|

|

-

|

|

|

-Hue leverages Apache Oozie to submit the jobs. It focuses on the yarn-client mode, as Oozie already runs the spark-submit command in a MapReduce2 task in the cluster. You can read more about the Spark modes here.

|

|

|

-

|

|

|

-Here is how to get started successfully:

|

|

|

-

|

|

|

-#### PySpark

|

|

|

-

|

|

|

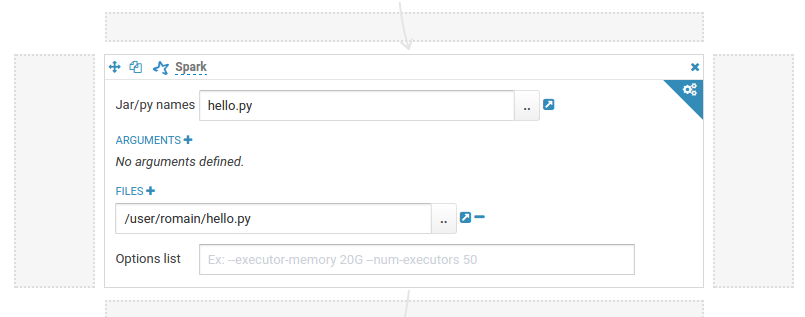

-Simple script with no dependency.

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

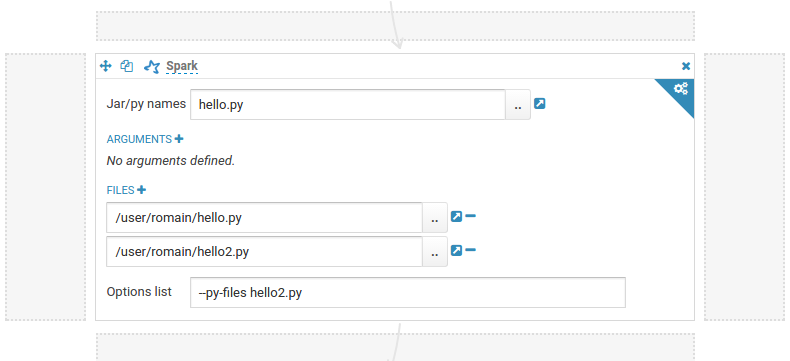

-Script with a dependency on another script (e.g. hello imports hello2).

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

-For more complex dependencies, like pandas, have a look at this documentation.

|

|

|

-

|

|

|

-

|

|

|

-#### Jars (Java or Scala)

|

|

|

-

|

|

|

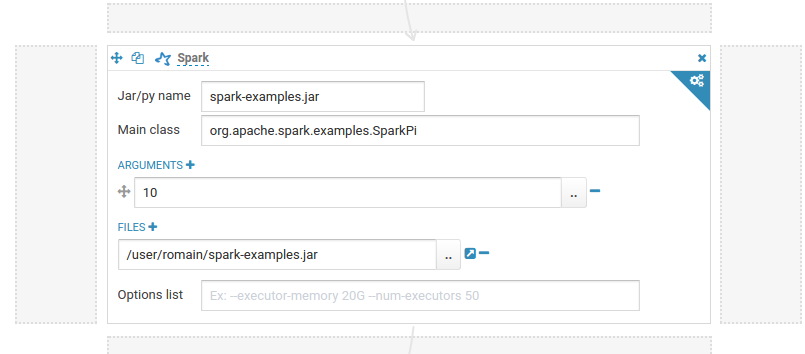

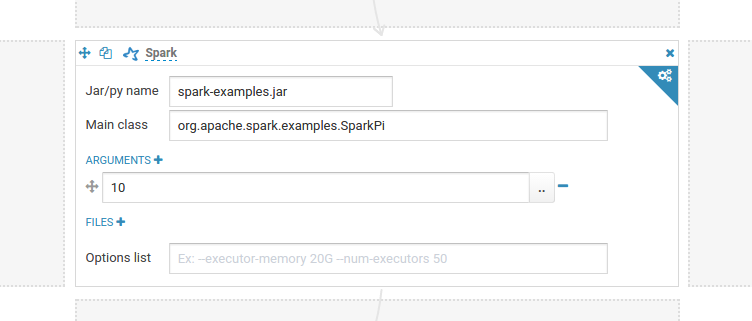

-Add the jars as a File dependency and specify the name of the main jar:

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

-Another solution is to put your jars in the ‘lib’ directory in the workspace (‘Folder’ icon on the top right of the editor).

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

-### Shell

|

|

|

-

|

|

|

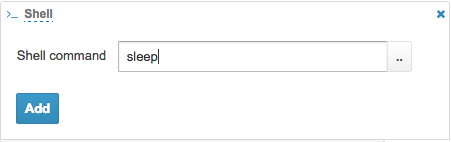

-If the executable is a standard Unix command, you can enter it directly in the `Shell Command` field and click the Add button.

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

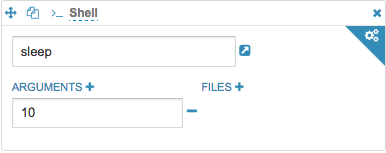

-Arguments to the command can be added by clicking the `Arguments+` button.

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

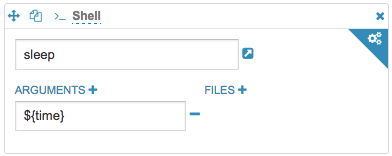

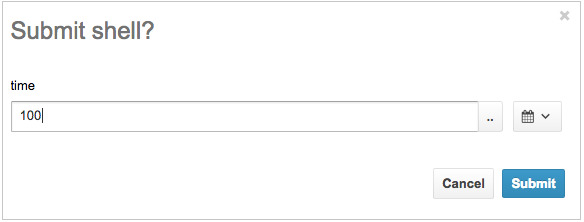

-The `${VARIABLE}` syntax lets you enter the value dynamically in the Submit popup.
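
The substitution behind `${VARIABLE}` can be sketched as follows. This is only an illustration of the idea using Python's standard `string.Template`, which happens to use the same `${NAME}` notation; it is not how Hue or Oozie implement it, and the helper names and the example argument are hypothetical.

```python
import re
from string import Template

# Matches ${NAME} placeholders such as ${user} or ${date}.
PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def find_variables(text):
    """Return the distinct placeholder names in order of first appearance."""
    names = []
    for name in PLACEHOLDER.findall(text):
        if name not in names:
            names.append(name)
    return names


def fill_variables(text, values):
    """Replace every ${NAME} with its value; raises KeyError if one is missing."""
    return Template(text).substitute(values)


argument = "/user/${user}/data-${date}.csv"
# The names a submit dialog would have to ask for.
print(find_variables(argument))
# The argument once the values have been entered.
print(fill_variables(argument, {"user": "alice", "date": "2019-03-13"}))
```

Collecting the names first mirrors what a submit dialog needs: it can only prompt for values once it knows which placeholders appear in the action.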

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

-If the executable is a script instead of a standard Unix command, it needs to be copied to HDFS, and its path can then be specified with the File Chooser in the `Files+` field.

|

|

|

-

|

|

|

- #!/usr/bin/env bash

|

|

|

- sleep 5  # illustrative duration; sleep needs an operand to run
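
For readers who want to see what such a Shell action roughly corresponds to in Oozie XML, here is a hedged sketch that builds a minimal workflow with one shell action shipping a script from HDFS. The function name `build_shell_workflow`, the node names, the script path and the schema versions are assumptions for illustration; the XML exported by the editor may differ.

```python
import xml.etree.ElementTree as ET


def build_shell_workflow(name, script_hdfs_path, arguments=()):
    """Return workflow XML with a single shell action that runs a script stored on HDFS."""
    script_name = script_hdfs_path.rsplit("/", 1)[-1]
    root = ET.Element("workflow-app", {"name": name, "xmlns": "uri:oozie:workflow:0.5"})
    ET.SubElement(root, "start", {"to": "shell-1"})

    # Node reached when the action fails.
    kill = ET.SubElement(root, "kill", {"name": "Kill"})
    ET.SubElement(kill, "message").text = "Shell action failed"

    action = ET.SubElement(root, "action", {"name": "shell-1"})
    shell = ET.SubElement(action, "shell", {"xmlns": "uri:oozie:shell-action:0.1"})
    ET.SubElement(shell, "job-tracker").text = "${jobTracker}"
    ET.SubElement(shell, "name-node").text = "${nameNode}"
    ET.SubElement(shell, "exec").text = script_name
    for argument in arguments:
        ET.SubElement(shell, "argument").text = str(argument)
    # Ship the script with the job; the part after '#' is its local name.
    ET.SubElement(shell, "file").text = f"{script_hdfs_path}#{script_name}"
    ET.SubElement(action, "ok", {"to": "End"})
    ET.SubElement(action, "error", {"to": "Kill"})

    ET.SubElement(root, "end", {"name": "End"})
    return ET.tostring(root, encoding="unicode")


workflow_xml = build_shell_workflow("shell-demo", "/user/demo/scripts/sleep.sh")
print(workflow_xml)
```

The `file` element plays the role of the `Files+` field: without it the script would not be present on the node where the action runs.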

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

-Additional Shell-action properties can be set by clicking the settings button at the top right corner.

|

|

|

-

|

|

|

-## Browser

|

|

|

-

|

|

|

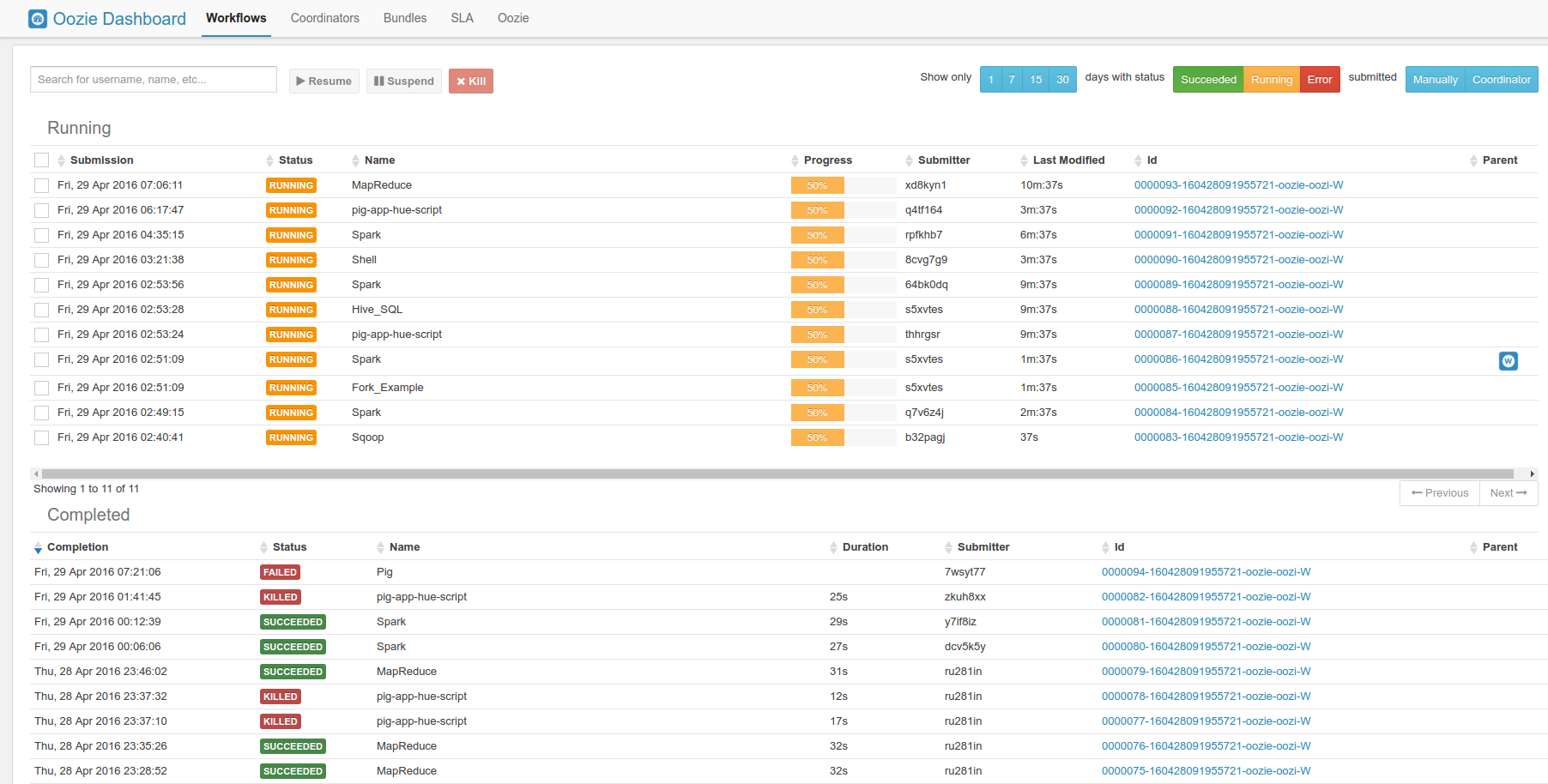

-Submitted workflows, schedules and bundles can be managed directly via an interface:

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

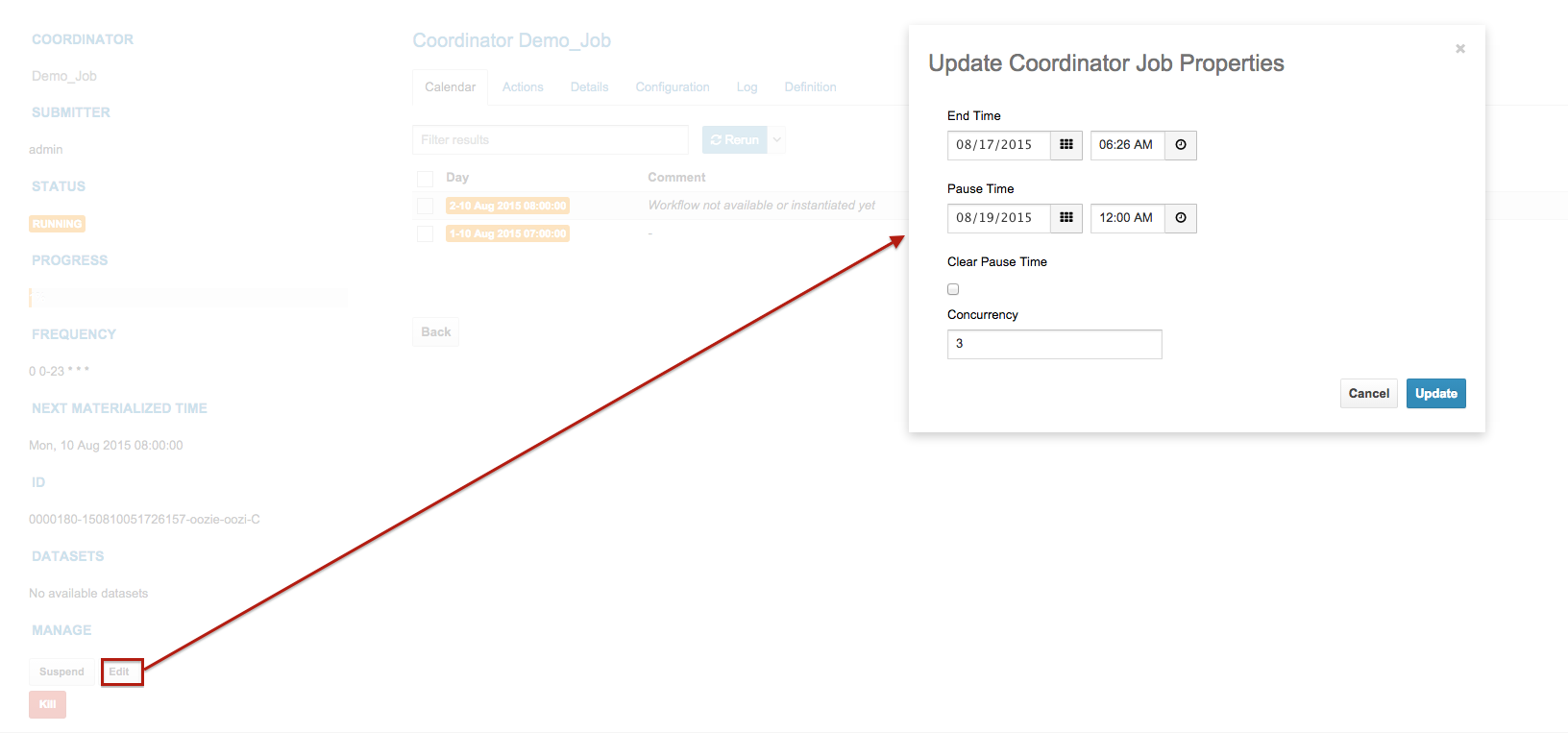

-### Extra Coordinator actions

|

|

|

-

|

|

|

-Update the Concurrency and PauseTime of a running Coordinator.
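
Outside the Browser, the same kind of change can in principle be requested from Oozie directly. The sketch below only builds the URL for such a request and sends nothing. It assumes the Oozie Web Services API accepts an HTTP PUT with `action=change` and a `value` of `;`-separated `key=value` pairs; the helper name, server address and job id are hypothetical, so check the API reference of your Oozie version before relying on it.

```python
from urllib.parse import quote


def build_change_url(oozie_url, job_id, concurrency=None, pausetime=None):
    """Build the URL of a 'change' request for a running coordinator (assumed API shape)."""
    changes = []
    if concurrency is not None:
        changes.append(f"concurrency={int(concurrency)}")
    if pausetime is not None:
        changes.append(f"pausetime={pausetime}")
    if not changes:
        raise ValueError("nothing to change")
    # Percent-encode the whole value so '=' and ';' survive inside the query string.
    value = quote(";".join(changes), safe="")
    return f"{oozie_url.rstrip('/')}/v1/job/{job_id}?action=change&value={value}"


url = build_change_url(
    "http://localhost:11000/oozie",        # hypothetical Oozie server
    "0000001-190313182809-oozie-oozi-C",   # hypothetical coordinator id
    concurrency=2,
    pausetime="2019-03-20T05:00Z",
)
print(url)
```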

|

|

|

-

|

|

|

-

|

|

|

-

|

|

|

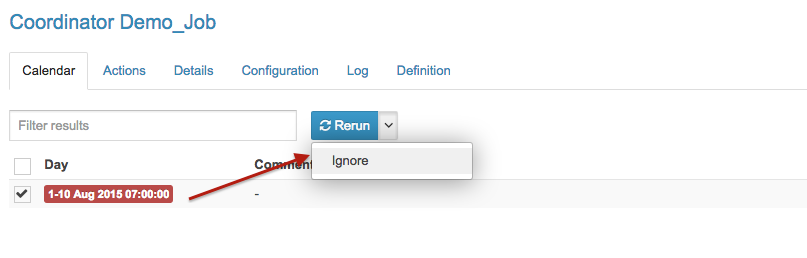

-Ignore a terminated Coordinator action.

|

|

|

-

|

|

|

-

|