**Sub-queries, correlated or not**

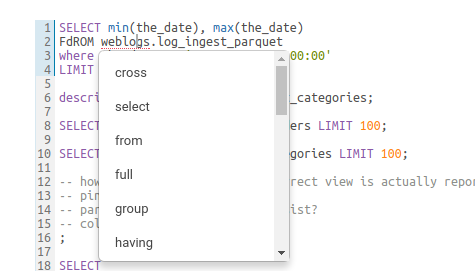

When editing a subquery, the autocompleter only makes suggestions within the scope of that subquery. For correlated subqueries, the outer tables are also taken into account.

**Context popup**

Right-click on any fragment of a query (e.g. a table name) to get all of its metadata. This is a handy shortcut for reading a description or checking what types of values a table or its columns contain.

It’s quite handy to look at column samples while writing a query to see what type of values you can expect. Hue can now perform some operations on the sample data: you can view distinct values as well as min and max values. Expect to see more operations in coming releases.

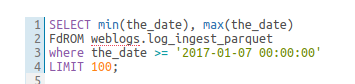

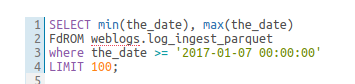

**Syntax checker**

Incorrect syntax is underlined in red so that the query can be fixed before submitting. A right-click offers suggestions.

**Advanced Settings**

The live autocompletion is fine-tuned for a better experience. Advanced settings can be accessed via `CTRL + ,` (or `CMD + ,` on Mac) or by clicking on the '?' icon.

The autocompleter talks to the backend to get data for tables, databases etc. and caches it to keep things quick. Clicking on the refresh icon in the left assist will clear the cache. This can be useful if a new table was created outside of Hue and is not yet showing up (Hue also clears its cache regularly to automatically pick up metadata changes made outside of Hue).
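The caching behaviour described above can be pictured with a small sketch. This is not Hue's implementation: the class name `MetadataCache`, the `fetch` callable and the 60-second default are assumptions made up for illustration. It only shows the general idea of serving metadata from memory, expiring it after a while, and clearing it on demand.

```python
import time


class MetadataCache:
    """Tiny time-based cache (hypothetical; names and TTL are illustrative)."""

    def __init__(self, fetch, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch = fetch        # callable that asks the backend, e.g. lists tables
        self._ttl = ttl_seconds    # how long an entry stays fresh
        self._clock = clock        # injectable so the expiry can be tested
        self._entries = {}         # key -> (expiry_time, value)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]        # still fresh: no backend round trip
        value = self._fetch(key)   # missing or expired: ask the backend again
        self._entries[key] = (now + self._ttl, value)
        return value

    def clear(self):
        """Plays the role of the refresh icon: drop every cached entry."""
        self._entries.clear()
```

A table created outside of the tool would only become visible after `clear()` is called or after the entry expires, which mirrors the two refresh paths mentioned above.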

### Sharing

Any query can be shared with permissions, as detailed in the [concepts](/user/concepts).

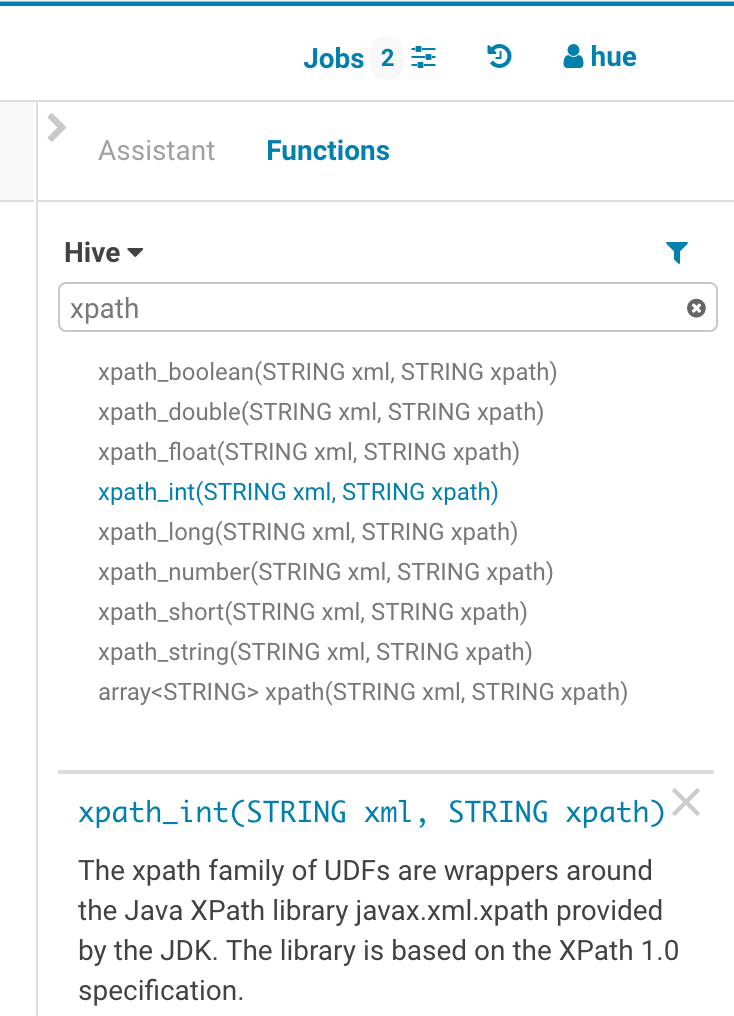

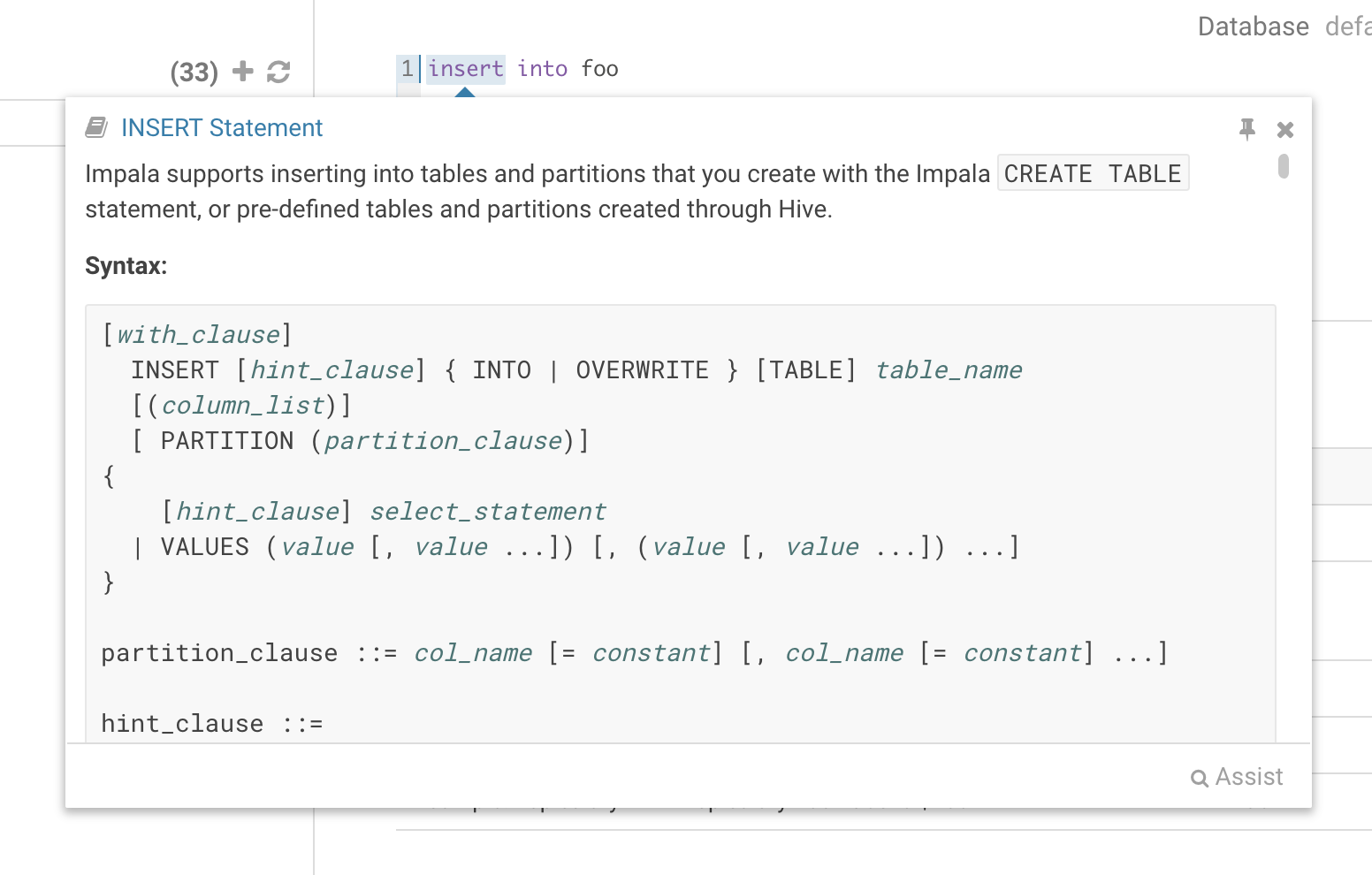

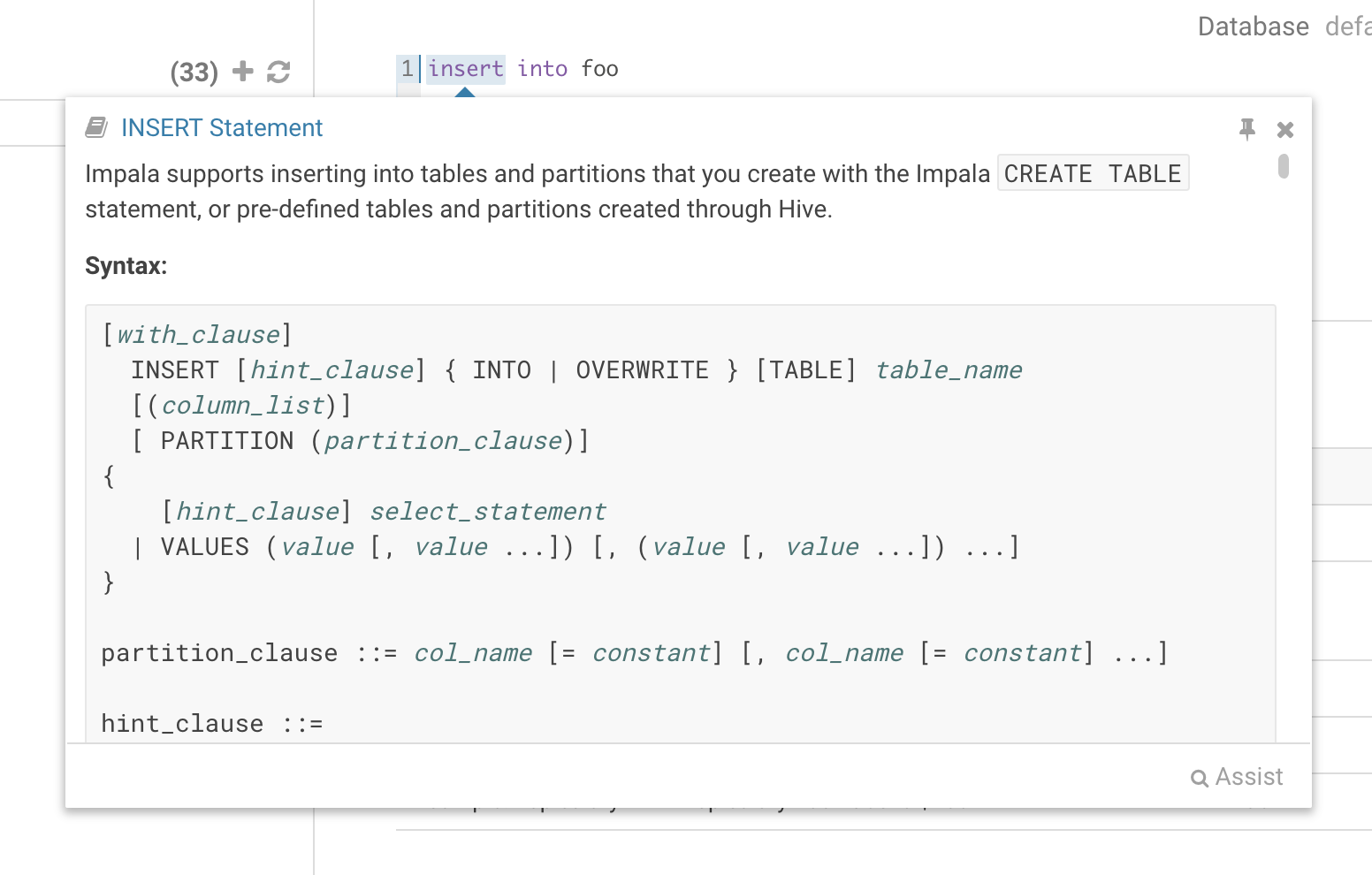

### Language reference

You can find the Language Reference in the right assist panel. The right panel itself has a new look with icons on the left hand side and can be minimised by clicking the active icon.

The filter input on top will only filter on the topic titles in this initial version. Below is an example of how to find documentation about joins in select statements.

While editing a statement there’s a quicker way to find the language reference for the current statement type: right-click the first word and the reference appears in a popover below:

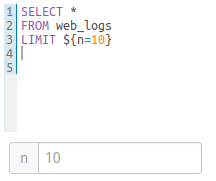

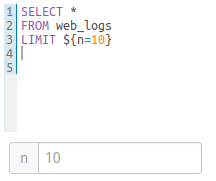

### Variables

Variables are used to easily configure parameters in a query. They are ideal for saving reports that can be shared or executed repeatedly:

**Single Valued**

select * from web_logs where country_code = "${country_code}"

**The variable can have a default value**

select * from web_logs where country_code = "${country_code=US}"

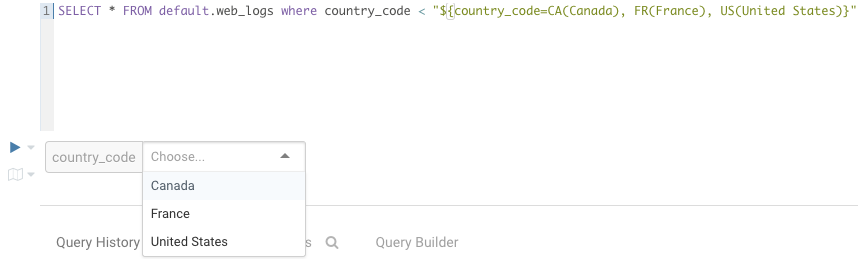

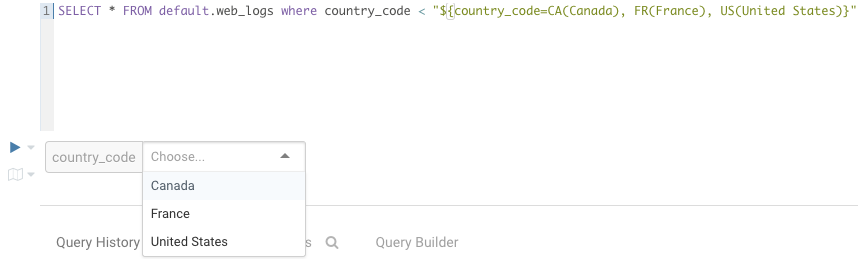

**Multi Valued**

select * from web_logs where country_code = "${country_code=CA, FR, US}"

**In addition, the displayed text for multi valued variables can be changed**

select * from web_logs where country_code = "${country_code=CA(Canada), FR(France), US(United States)}"

**For values that are not textual, omit the quotes.**

select * from boolean_table where boolean_column = ${boolean_column}

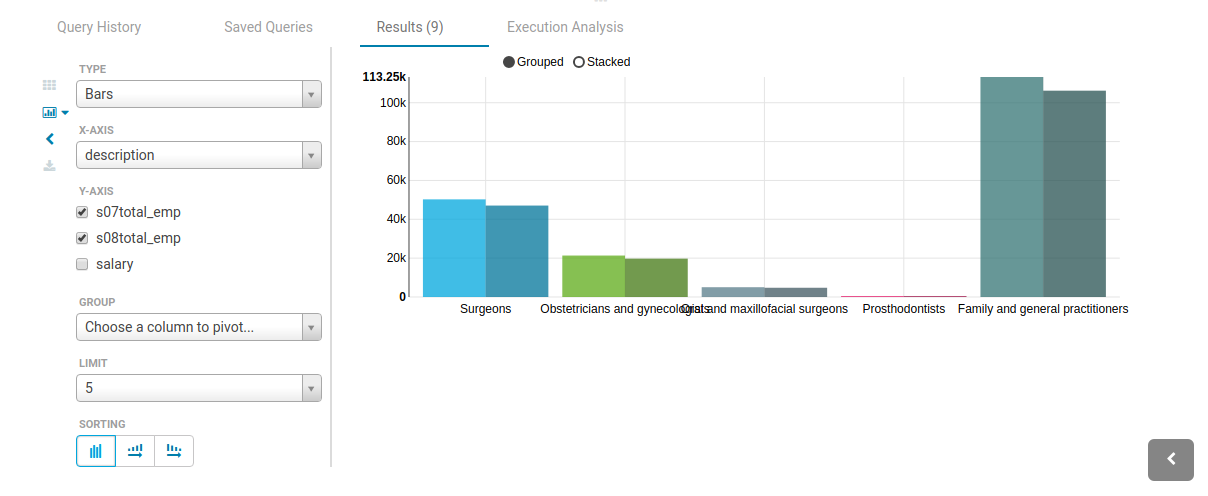

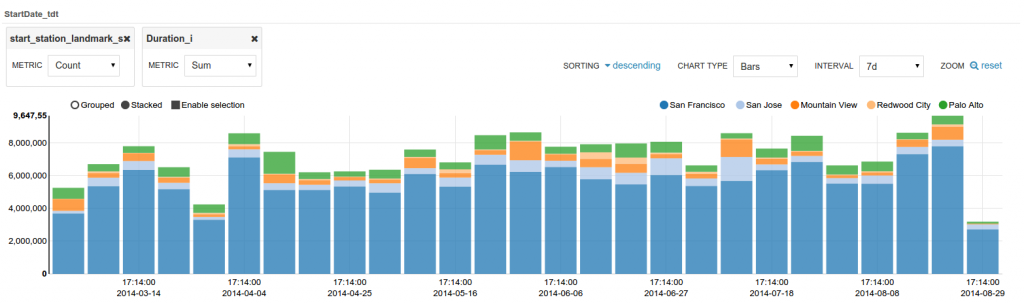

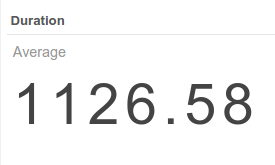

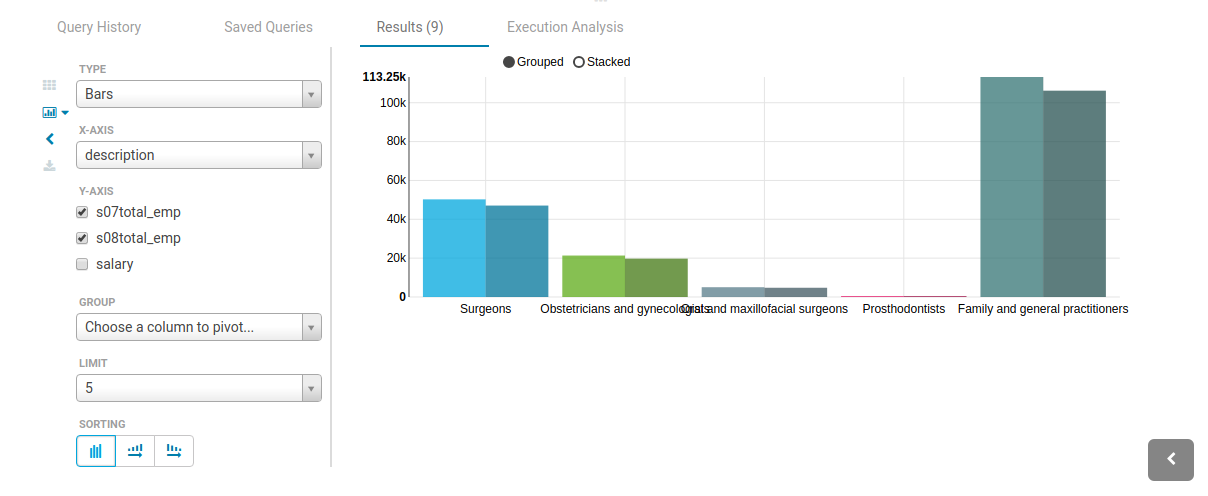

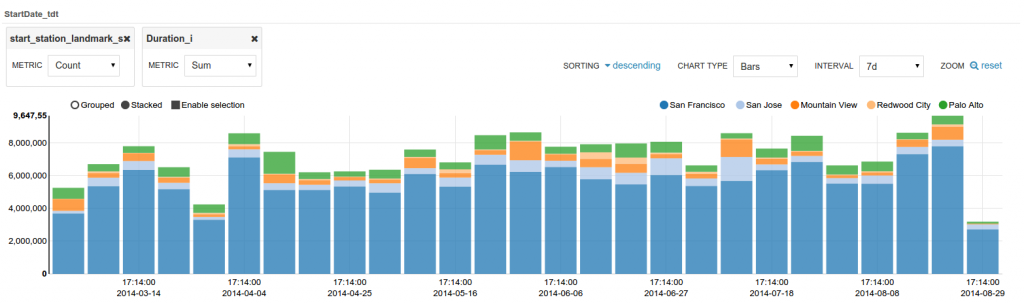
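To make the variable syntax above more concrete, here is a minimal sketch of how such placeholders could be parsed and substituted. It is not Hue's own code: the regular expression, the function names `parse_options` and `substitute`, and the choice of falling back to the first listed option are all assumptions for illustration.

```python
import re

# Matches ${name} or ${name=spec}; a hypothetical pattern for illustration only.
VARIABLE_RE = re.compile(r"\$\{(\w+)(?:=([^}]*))?\}")


def parse_options(spec):
    """Turn 'CA(Canada), FR' into [('CA', 'Canada'), ('FR', 'FR')]."""
    options = []
    for item in spec.split(","):
        item = item.strip()
        labelled = re.fullmatch(r"([^()]+)\((.*)\)", item)
        if labelled:
            # value(display text) form
            options.append((labelled.group(1).strip(), labelled.group(2).strip()))
        else:
            # plain value: the display text is the value itself
            options.append((item, item))
    return options


def substitute(query, values=None):
    """Replace each placeholder with a supplied value or its first option."""
    values = values or {}

    def replace(match):
        name, spec = match.group(1), match.group(2)
        if name in values:
            return str(values[name])
        if spec is None:
            raise KeyError("no value supplied for variable %r" % name)
        # Assumption: the first listed option acts as the default.
        return parse_options(spec)[0][0]

    return VARIABLE_RE.sub(replace, query)
```

For example, `substitute('... = "${country_code=US}"', {"country_code": "FR"})` fills in `FR`, while calling it without values falls back to `US`. The quotes around the placeholder stay in the query text, which is why non-textual values are written without them.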

### Charting

These visualizations are convenient for plotting chronological data or when subsets of rows have the same attribute: they will be stacked together.

* Pie

* Bar/Line with pivot

* Timeline

* Scatter plot

* Maps (Marker and Gradient)

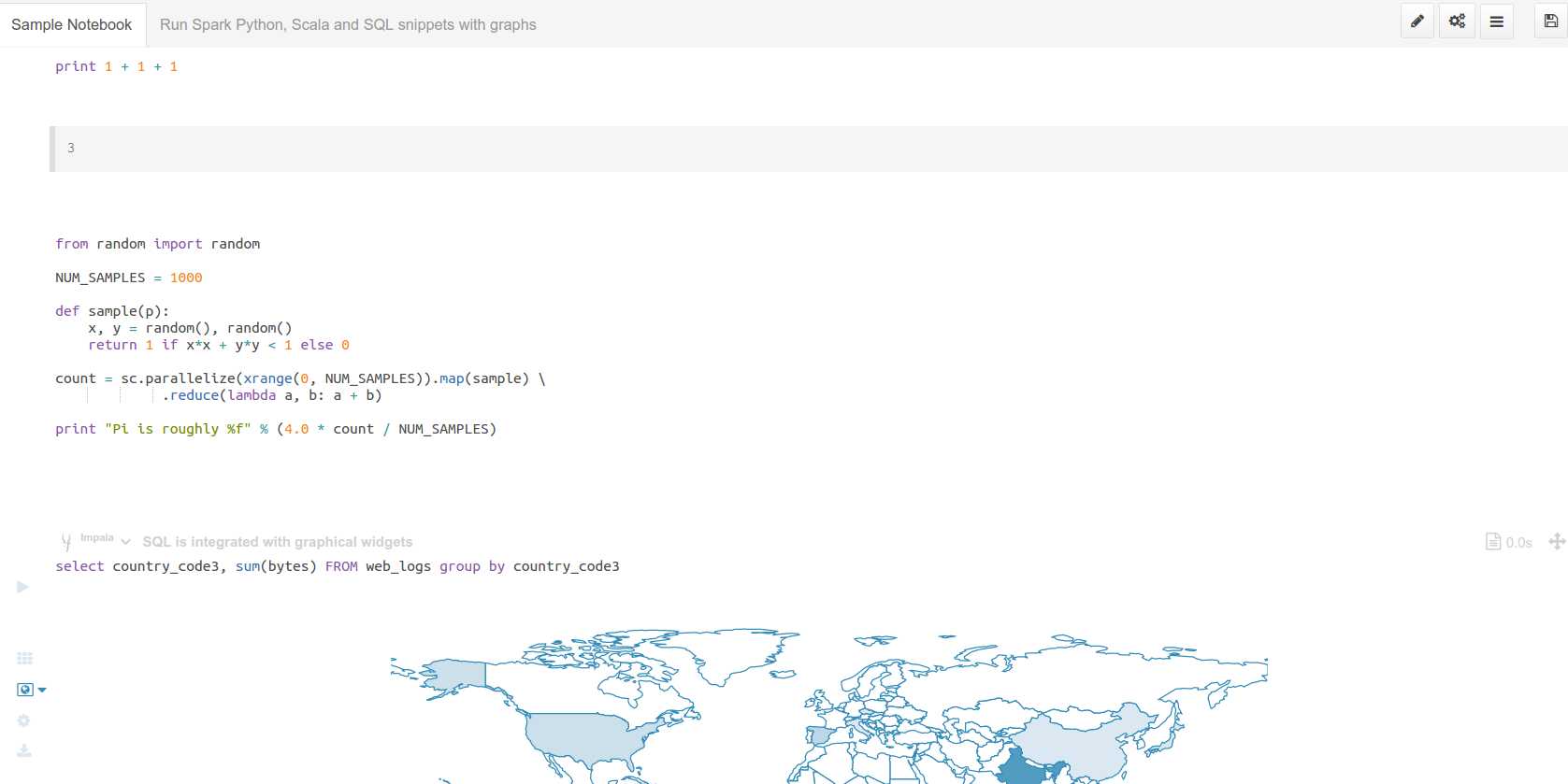

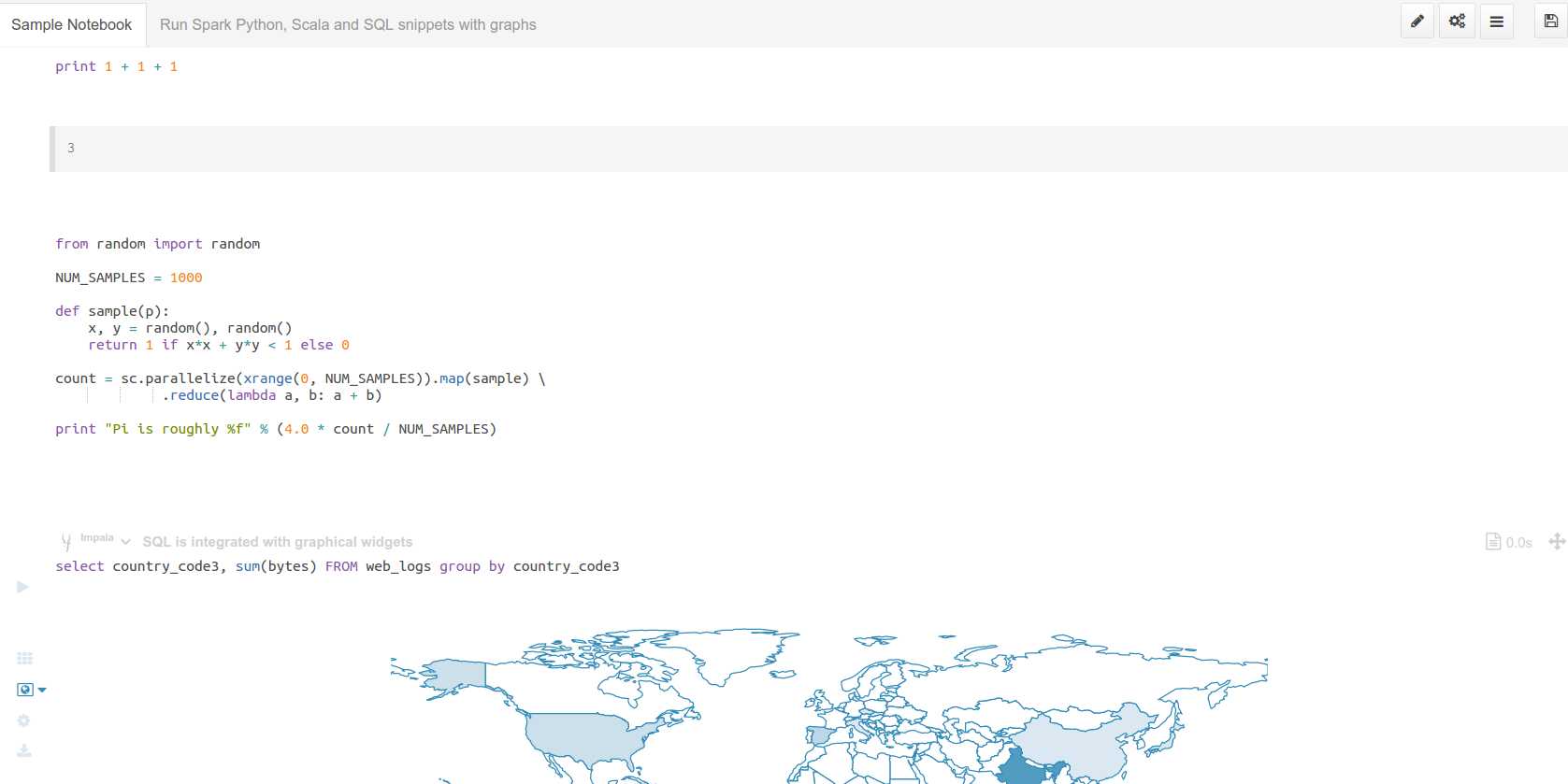

### Notebook mode

Snippets of different dialects can be added into a single page:

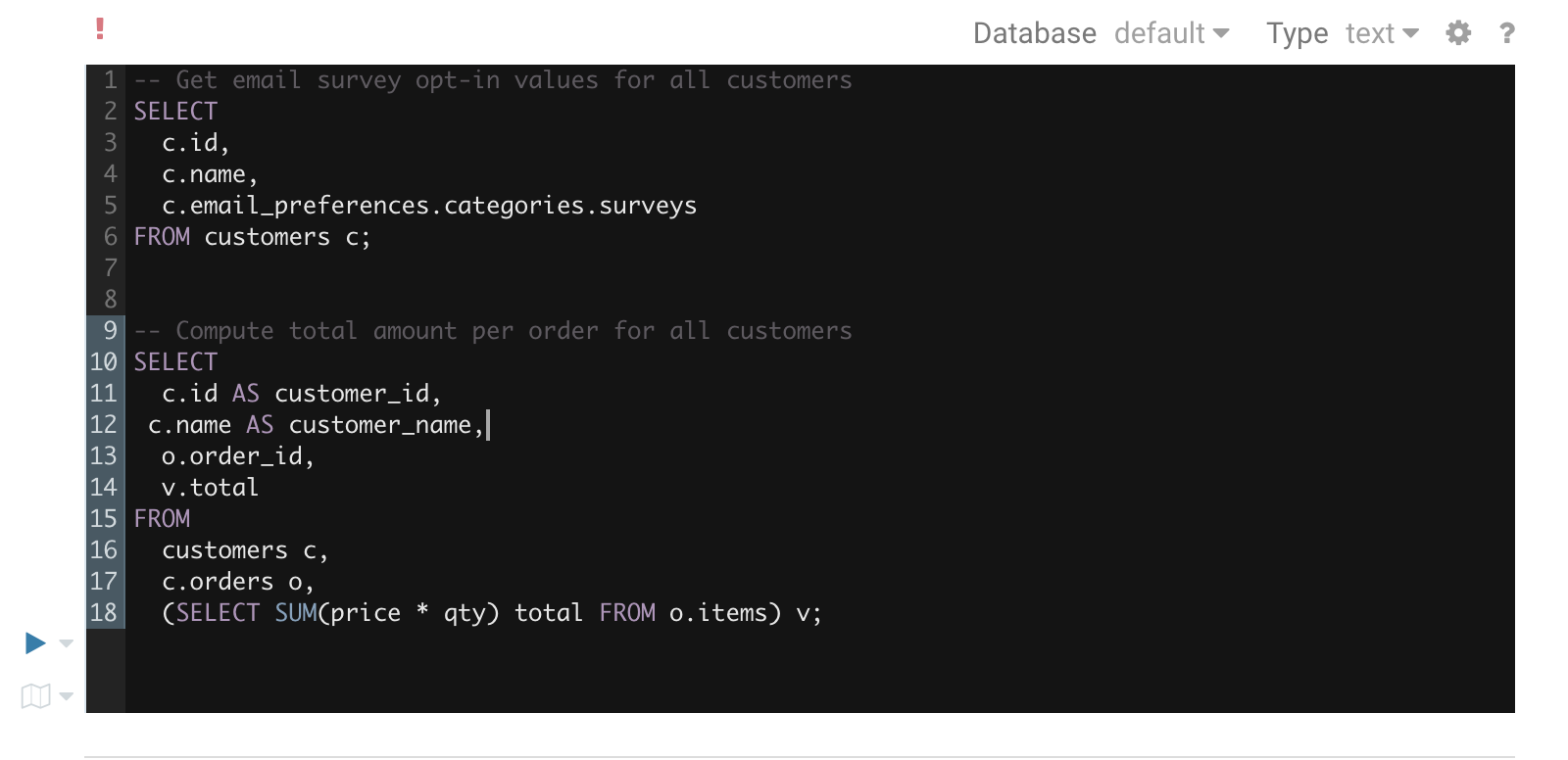

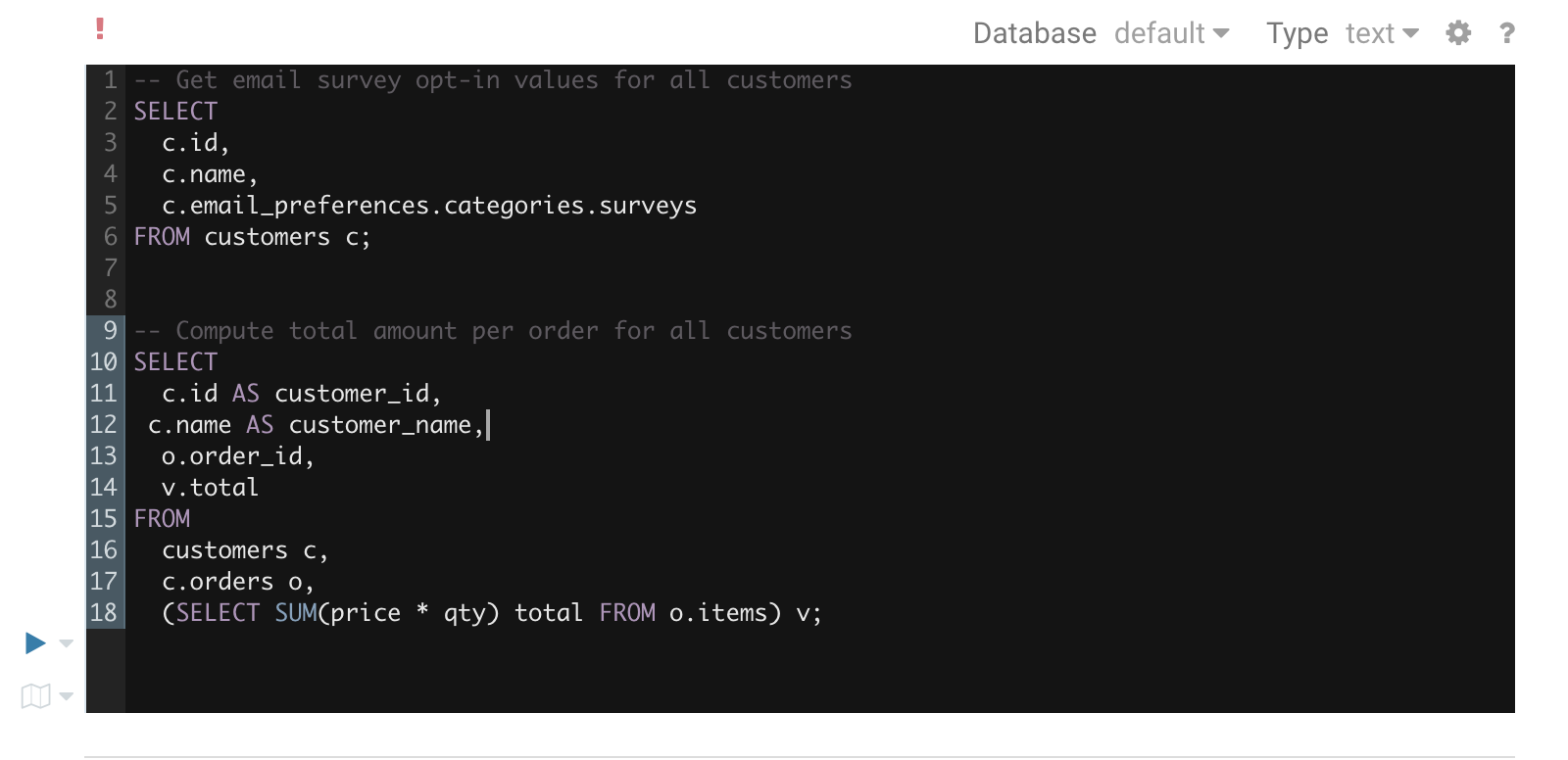

### Dark mode

Initially this mode is limited to the actual editor area and we’re considering extending this to cover all of Hue.

To toggle the dark mode, press `Ctrl-Alt-T` (or `Command-Option-T` on Mac) while the editor has focus. Alternatively, you can control this through the settings menu, which is shown by pressing `Ctrl-,` (or `Command-,` on Mac).

### Query troubleshooting

#### Pre-query execution

**Popular values**

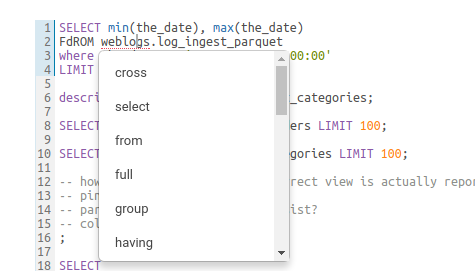

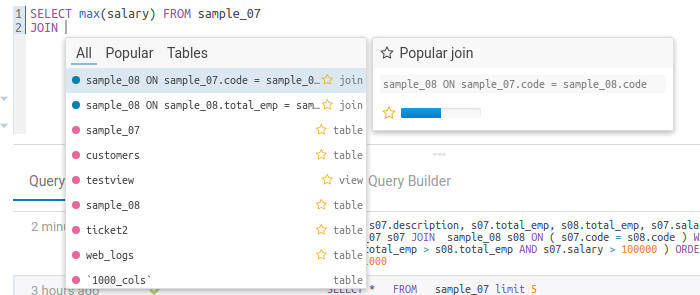

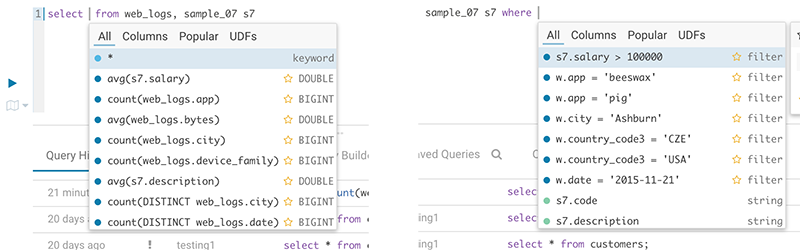

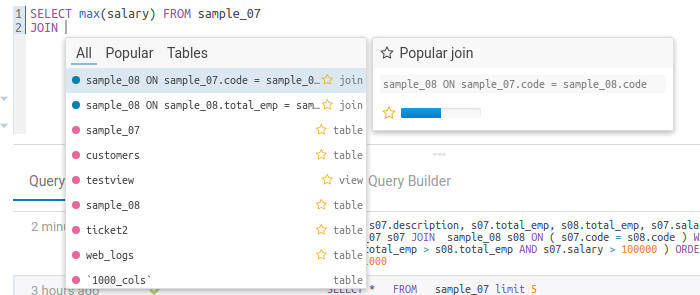

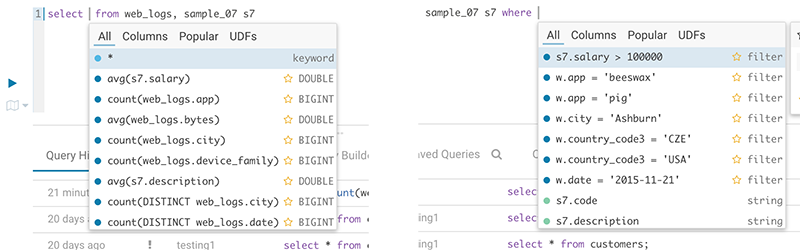

The autocompleter will suggest popular tables, columns, filters, joins, group by, order by etc. based on metadata from Navigator Optimizer. A new “Popular” tab has been added to the autocomplete result dropdown which will be shown when there are popular suggestions available.

This is particularly useful for doing joins on unknown datasets or getting the most interesting columns of tables with hundreds of them.

**Risk alerts**

While editing, Hue will run your queries through Navigator Optimizer in the background to identify potential risks that could affect the performance of your query. If a risk is identified, an exclamation mark is shown above the query editor and suggestions on how to improve the query are displayed in the lower part of the right assistant panel.

#### During execution

The Query Browser details the plan of the query and its bottlenecks. When detected, "Health" risks are listed with suggestions on how to fix them.

#### Post-query execution

A new experimental panel, when enabled, can offer post-execution risk analysis and recommendations on how to tweak the query for better speed.

### Presentation Mode

Presentation mode turns a list of semicolon-separated queries into an interactive presentation when you click on the 'Dashboard' icon. It is great for doing demos or reporting.
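As a rough illustration of what "semicolon-separated" means here, the sketch below splits a script into individual statements while ignoring semicolons that sit inside quoted strings. The function name `split_statements` is hypothetical and this is not how Hue parses queries; it deliberately ignores escaped quotes and SQL comments.

```python
def split_statements(script):
    """Split a script on semicolons that are outside of quoted strings."""
    statements, current, quote = [], [], None
    for ch in script:
        if quote:
            # Inside a string literal: keep everything, watch for the closing quote.
            current.append(ch)
            if ch == quote:
                quote = None
        elif ch in ("'", '"'):
            quote = ch
            current.append(ch)
        elif ch == ";":
            # Statement boundary: emit what was collected, skipping empty pieces.
            text = "".join(current).strip()
            if text:
                statements.append(text)
            current = []
        else:
            current.append(ch)
    tail = "".join(current).strip()
    if tail:
        statements.append(tail)
    return statements
```

Each returned statement would correspond to one section of the presentation.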

### Scheduling

Scheduling of queries is currently done via Apache Oozie and will be open to other schedulers with [HUE-3797](https://issues.cloudera.org/browse/HUE-3797).

## Databases & Datawarehouses

### List

Use the Editor or Dashboard to query [any database or datawarehouse](/administrator/configuration/connectors/). Those databases currently need to be first configured by the administrator.

### Autocompletes & Connectors

Also read about building [better autocompletes](/developer/parsers/), extending the connectors with SQLAlchemy or JDBC, or building your own [connectors](/developer/sdk).

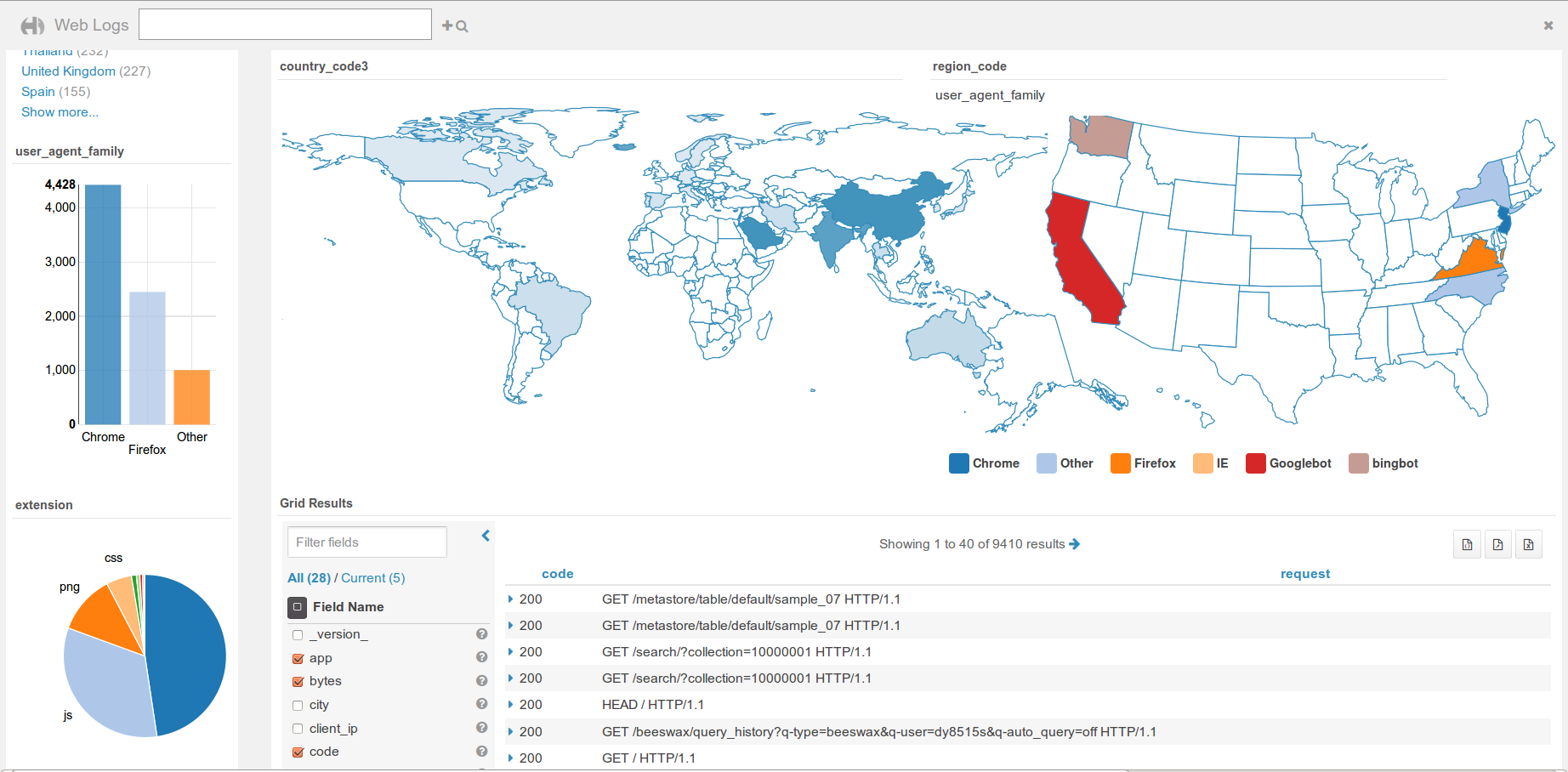

## Dashboards

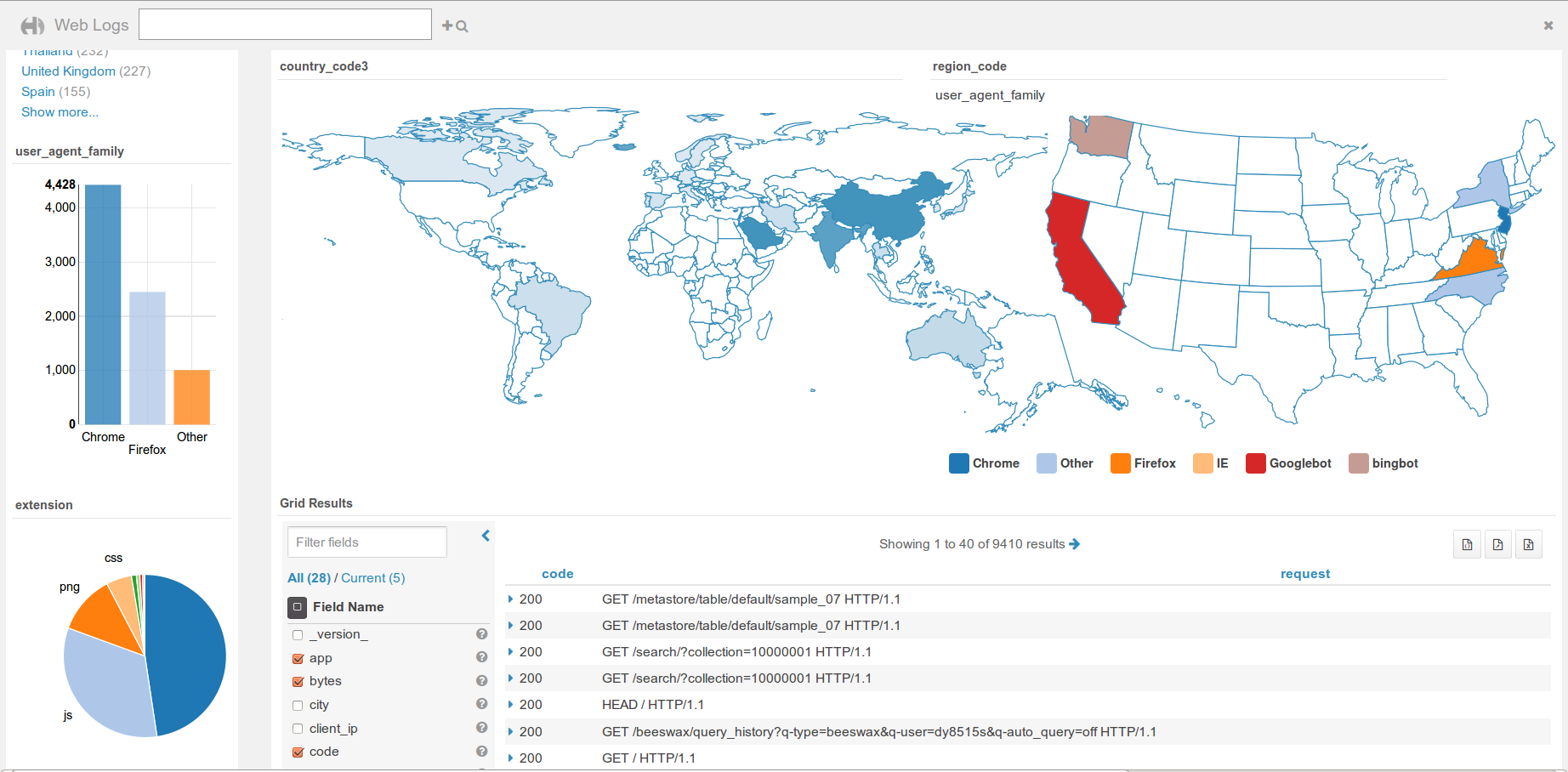

Dashboards are an interactive way to explore your SQL or Solr data quickly and easily. No programming is required: the analysis is done with drag & drop and clicks.

Simply drag & drop widgets that are interconnected together. This is great for exploring new datasets or monitoring without having to type.

Currently supported databases are Apache Solr, Apache Hive and Apache Impala. To add [more databases](/user/querying/#databases-datawarehouses), feel free to check the [SDK](/developer/sdk/).

**Tutorials**

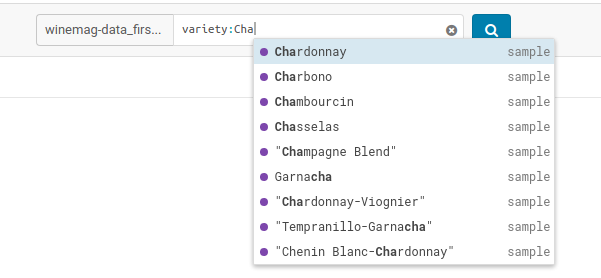

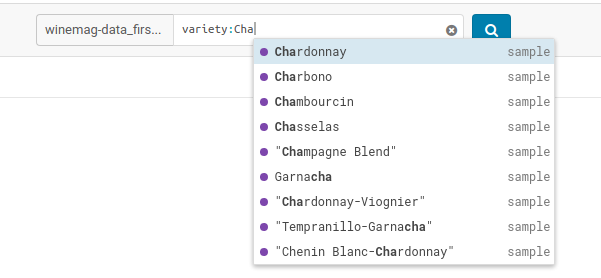

* The top search bar offers a [full autocomplete](http://gethue.com/intuitively-discovering-and-exploring-a-wine-dataset-with-the-dynamic-dashboards/) on all the values of the index

* Comprehensive demo is available on the [BikeShare data visualization post](http://gethue.com/bay-area-bikeshare-data-analysis-with-search-and-spark-notebook/).

### Analytics facets

Drill down into the dimensions of the datasets and apply aggregate functions on top of them:

Some facets can be nested:

### Autocomplete

The top bar supports faceted and free-text search, with autocompletion.

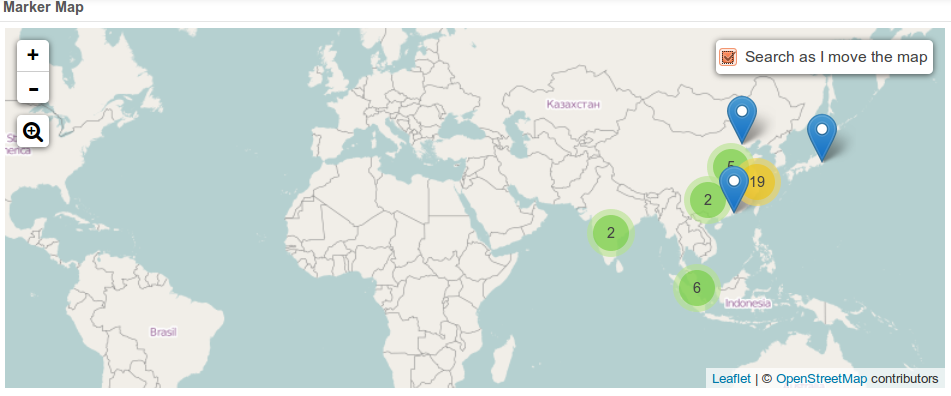

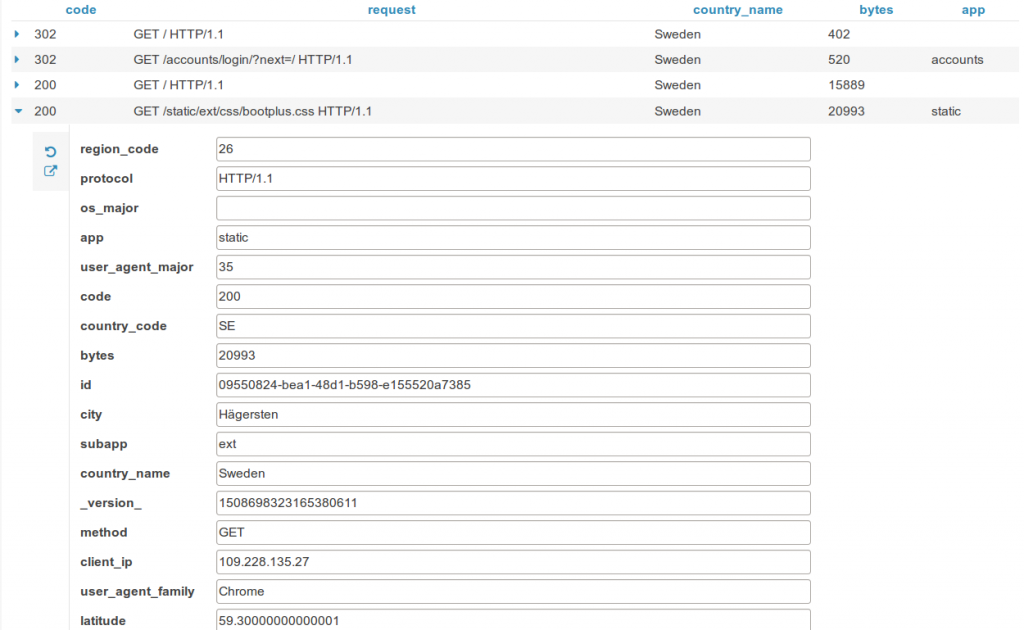

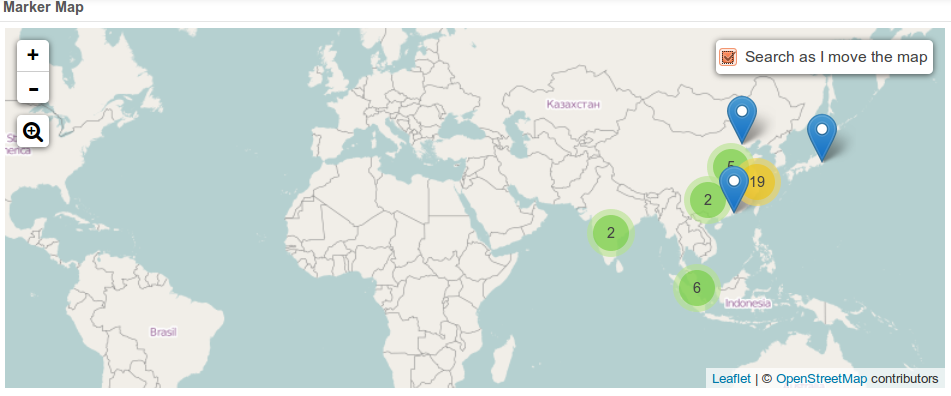

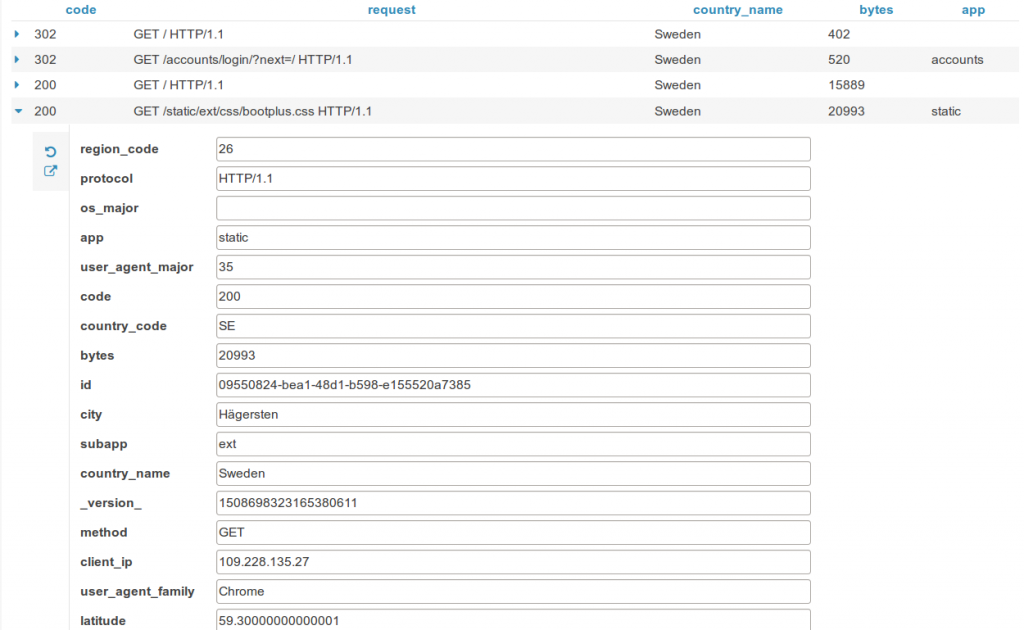

### Marker Map

Points close to each other are grouped together and will expand when zooming-in. A Yelp-like search filtering experience can also be created by checking the box.

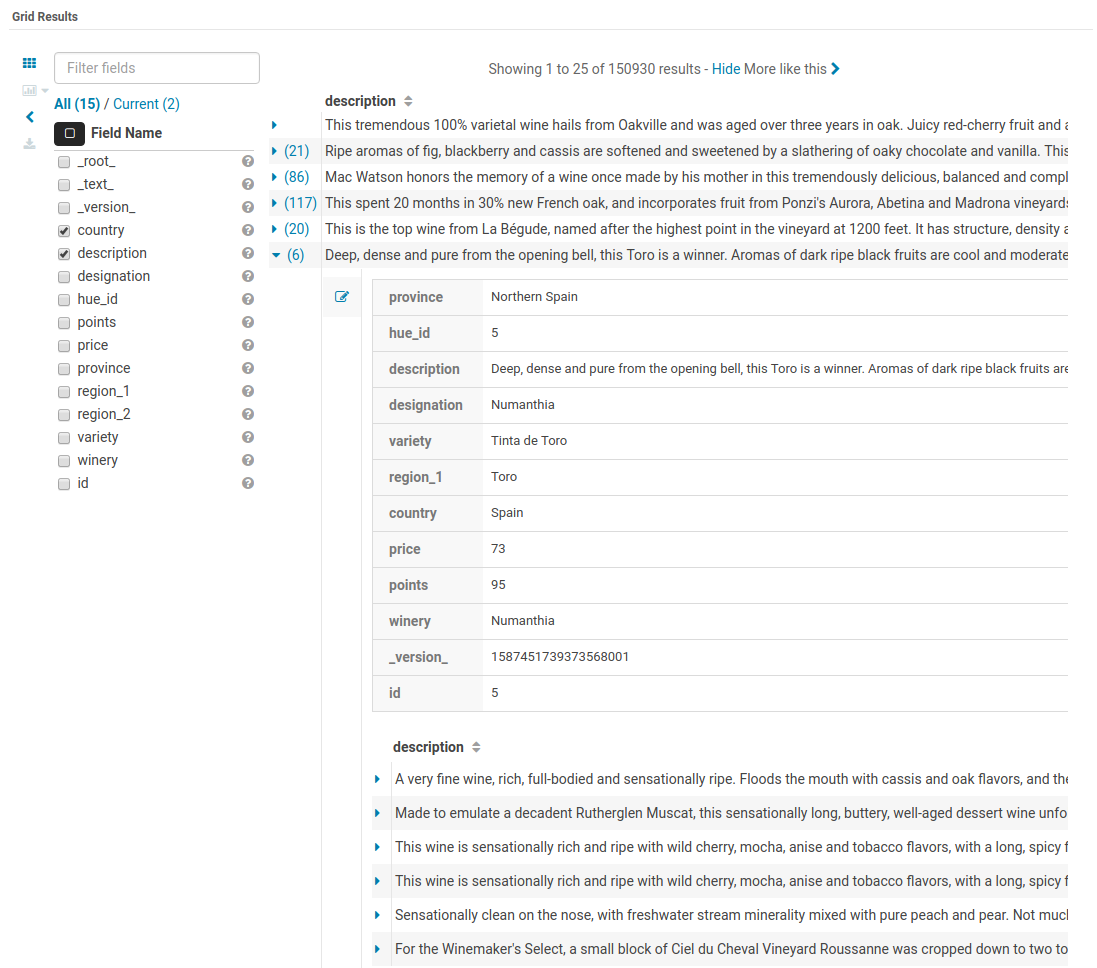

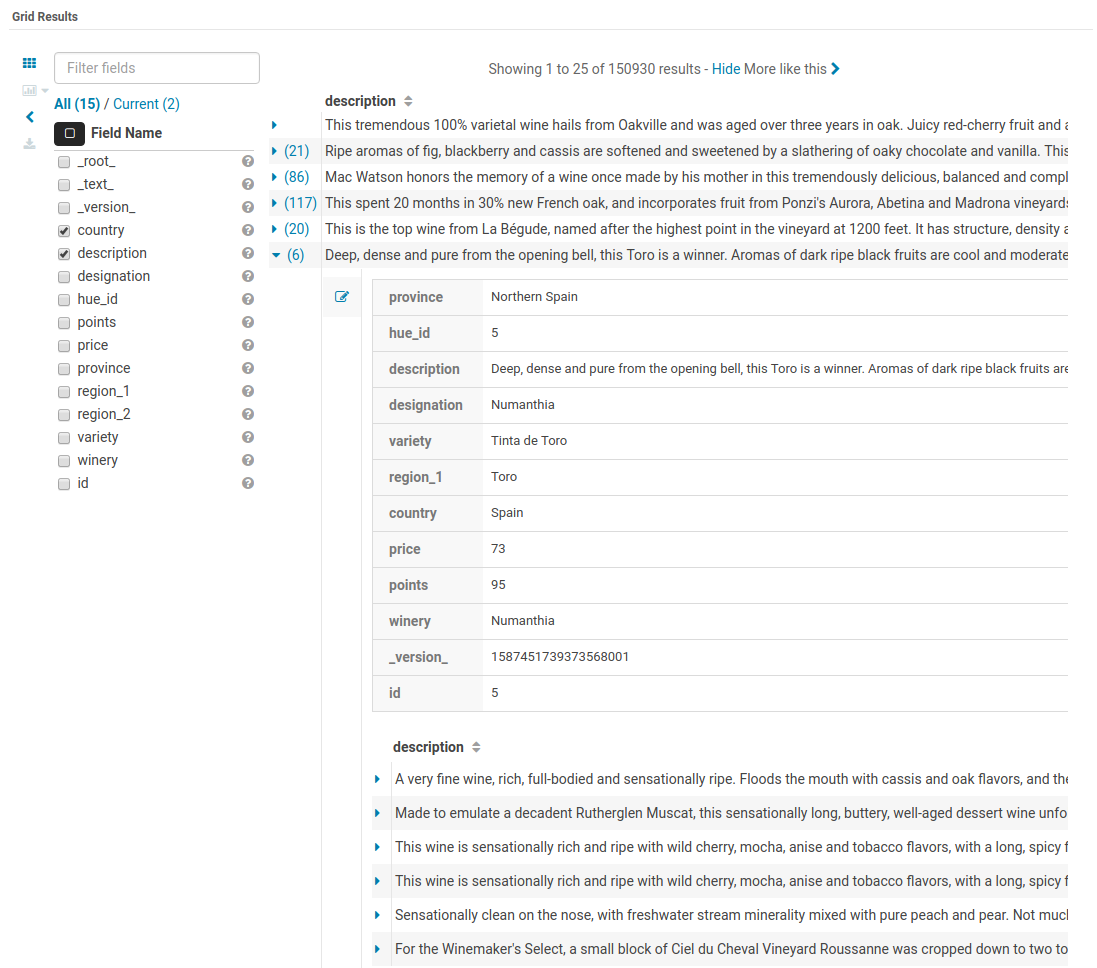

### Edit records

Indexed records can be directly edited in the Grid or HTML widgets by admins.

### Link to original documents

Links to the original documents can also be inserted. Add to the record a field named ‘link-meta’ that contains some JSON describing the URL or address of a table or file that can be opened in the HBase Browser, Metastore App or File Browser:

Any link

`{'type': 'link', 'link': 'gethue.com'}`

HBase Browser

`{'type': 'hbase', 'table': 'document_demo', 'row_key': '20150527'}`

`{'type': 'hbase', 'table': 'document_demo', 'row_key': '20150527', 'fam': 'f1'}`

`{'type': 'hbase', 'table': 'document_demo', 'row_key': '20150527', 'fam': 'f1', 'col': 'c1'}`

File Browser

`{'type': 'hdfs', 'path': '/data/hue/file.txt'}`

Table Catalog

`{'type': 'hive', 'database': 'default', 'table': 'sample_07'}`
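When records are generated by a script, the ‘link-meta’ value can be built programmatically. The sketch below is an assumption-laden helper, not part of Hue: the function name `make_link_meta` is made up, and the required keys per type are simply inferred from the examples above. It emits standard double-quoted JSON, whereas the examples above are written with single quotes; whether both forms are accepted is not verified here.

```python
import json

# Keys each link type appears to need, inferred from the examples above (assumption).
REQUIRED_KEYS = {
    "link": ("link",),
    "hbase": ("table", "row_key"),
    "hdfs": ("path",),
    "hive": ("database", "table"),
}


def make_link_meta(link_type, **fields):
    """Return the string to store in a record's 'link-meta' field."""
    if link_type not in REQUIRED_KEYS:
        raise ValueError("unknown link type: %r" % link_type)
    missing = [key for key in REQUIRED_KEYS[link_type] if key not in fields]
    if missing:
        raise ValueError("missing keys for %s link: %s" % (link_type, ", ".join(missing)))
    meta = {"type": link_type}
    meta.update(fields)  # optional keys such as 'fam' and 'col' pass through
    return json.dumps(meta)
```

For instance, `make_link_meta("hbase", table="document_demo", row_key="20150527", fam="f1")` produces the second HBase example in double-quoted form.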

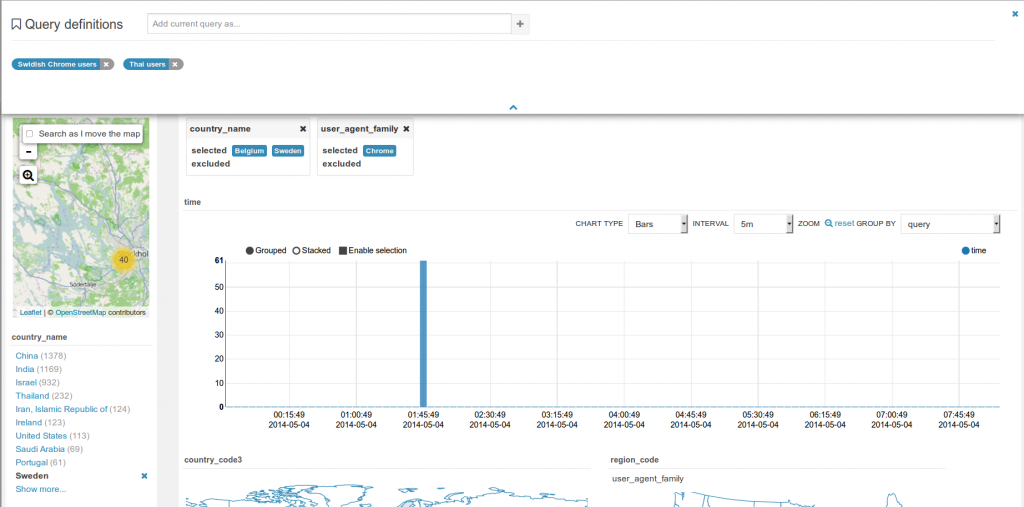

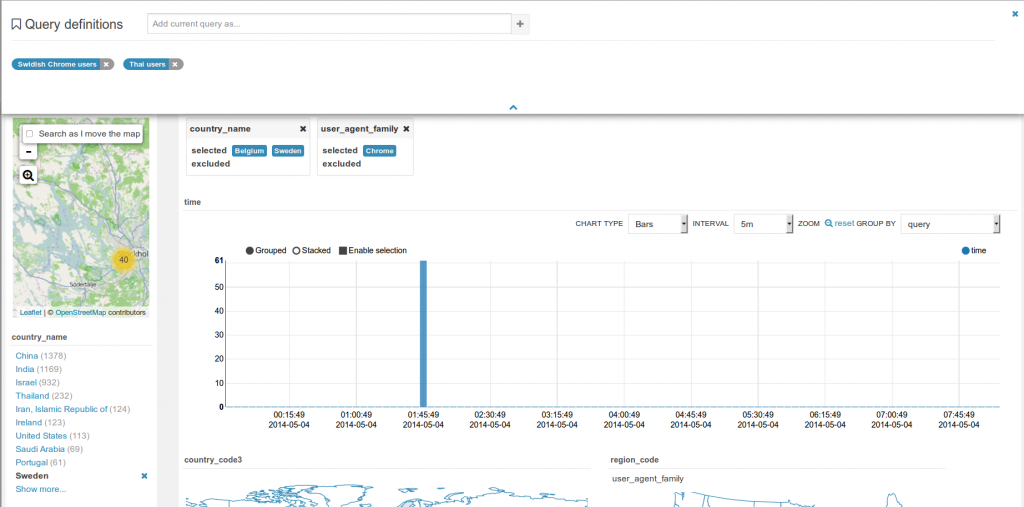

### Save queries

The currently selected facets, filters and query strings can be saved with a name within the dashboard. These are useful for defining “cohorts” or pre-selections of records and quickly reloading them.

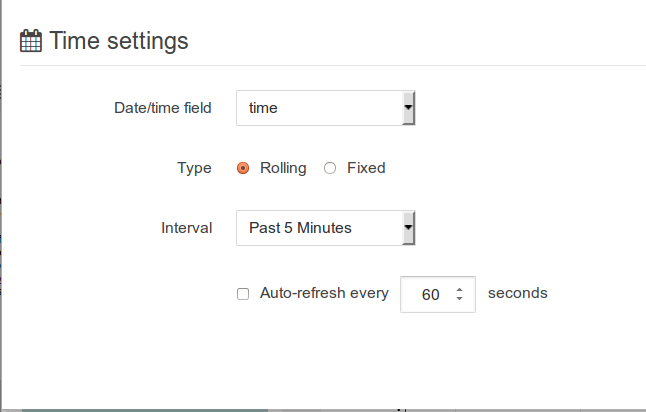

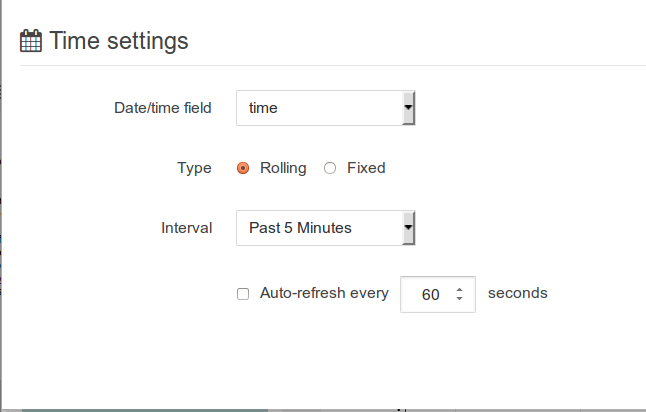

### ‘Fixed’ or ‘rolling’ time window

Real time indexing can now shine with the rolling window filter and the automatic refresh of the dashboard every N seconds. See it in action in the real time Twitter indexing with Spark streaming post.
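The difference between the two window types can be summarised in a few lines. This is only a conceptual sketch with hypothetical function names, not the dashboard's actual filter code: a fixed window keeps the same bounds on every refresh, while a rolling window is recomputed relative to the current time each time the dashboard refreshes.

```python
from datetime import datetime, timedelta, timezone


def fixed_window(start, end):
    """A fixed window always covers the same absolute interval."""
    return (start, end)


def rolling_window(seconds, now=None):
    """A rolling window covers the last `seconds` seconds, ending at `now`."""
    if now is None:
        now = datetime.now(timezone.utc)
    return (now - timedelta(seconds=seconds), now)
```

With an automatic refresh every N seconds, calling `rolling_window(...)` again at each refresh slides the interval forward so that newly indexed records come into view.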

### 'More like this'

This feature lets you select the fields you would like to use to find similar records. This is a great way to find similar issues, customers, people... with regard to a list of attributes.

## Jobs

In addition to SQL queries, the Editor application enables you to create and submit batch jobs to the cluster.

### Spark

#### Interactive

Hue relies on [Livy](http://livy.io/) for the interactive Scala, Python and R snippets.

Livy was initially developed in the Hue project, but gained a lot of traction and was moved to its own project on livy.io. Here is a tutorial on how to use a notebook to perform some Bike Data analysis.

Read more about it:

* [How to use the Livy Spark REST Job Server API for doing some interactive Spark with curl](http://gethue.com/how-to-use-the-livy-spark-rest-job-server-for-interactive-spark-2-2/)

* [How to use the Livy Spark REST Job Server API for submitting batch jar, Python and Streaming Jobs](http://gethue.com/how-to-use-the-livy-spark-rest-job-server-api-for-submitting-batch-jar-python-and-streaming-spark-jobs/)

Make sure that the Notebook and interpreters are set in the hue.ini, and Livy is up and running:

    [spark]
    # Host address of the Livy Server.
    livy_server_host=localhost

    [notebook]
    show_notebooks=true

    [[interpreters]]

    [[[hive]]]
    name=Hive
    interface=hiveserver2

    [[[spark]]]
    name=Scala
    interface=livy

    [[[pyspark]]]
    name=PySpark
    interface=livy
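To check that Livy is reachable independently of Hue, its REST API can be called directly. The sketch below only builds the HTTP requests with the standard library and leaves sending them to the caller. It assumes Livy listens on its default port 8998 on the host configured above; the helper names are hypothetical, and the `/sessions` and `/sessions/{id}/statements` endpoints are the ones described in the Livy REST documentation and in the posts linked above.

```python
import json
from urllib.request import Request

# Assumption: Livy's default port on the host set as livy_server_host above.
LIVY_URL = "http://localhost:8998"


def _post(path, payload):
    """Build (but do not send) a JSON POST request against the Livy server."""
    return Request(
        LIVY_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def create_session_request(kind="pyspark"):
    """Request that starts an interactive session of the given kind."""
    return _post("/sessions", {"kind": kind})


def run_statement_request(session_id, code):
    """Request that runs a code snippet in an existing session."""
    return _post("/sessions/%d/statements" % session_id, {"code": code})
```

Passing one of these requests to `urllib.request.urlopen` sends it. The response to the session request contains the session id to use for subsequent statements.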

#### Batch

This is a quick way to submit any Jar or Python jar/script to a cluster via the Scheduler or Editor.

Running Spark jobs with Spark on YARN often requires trial and error to make it work.

Hue leverages Apache Oozie to submit the jobs. It focuses on the yarn-client mode, as Oozie is already running the spark-submit command in a MapReduce2 task in the cluster. You can read more about the Spark modes here.

[Here is how to get started successfully](http://gethue.com/how-to-schedule-spark-jobs-with-spark-on-yarn-and-oozie/).

And how to use the [Spark Action](http://gethue.com/use-the-spark-action-in-oozie/).

### Pig

Type [Apache Pig](https://pig.apache.org/) Latin instructions to load/merge data to perform ETL or Analytics.

### Sqoop

Run an SQL import from a traditional relational database via an [Apache Sqoop](https://sqoop.apache.org/) command.

### Shell

Type or specify a path to a regular shell script.

[Read more about it here](http://gethue.com/use-the-shell-action-in-oozie/).

### Java

A Java job design consists of a main class written in Java.

**Sub-queries, correlated or not**

When editing subqueries it will only make suggestions within the scope of the subquery. For correlated subqueries the outside tables are also taken into account.

**Context popup**

Right click on any fragement of the queries (e.g. a table name) to gets all its metadata information. This is a handy shortcut to get more description or check what types of values are contained in the table or columns.

It’s quite handy to be able to look at column samples while writing a query to see what type of values you can expect. Hue now has the ability to perform some operations on the sample data, you can now view distinct values as well as min and max values. Expect to see more operations in coming releases.

**Syntax checker**

A little red underline will display the incorrect syntax so that the query can be fixed before submitting. A right click offers suggestions.

**Advanced Settings**

The live autocompletion is fine-tuned for a better experience advanced settings an be accessed via `CTRL +` , (or on Mac `CMD + ,`) or clicking on the '?' icon.

The autocompleter talks to the backend to get data for tables and databases etc and caches it to keep it quick. Clicking on the refresh icon in the left assist will clear the cache. This can be useful if a new table was created outside of Hue and is not yet showing-up (Hue will regularly clear his cache to automatically pick-up metadata changes done outside of Hue).

### Sharing

Any query can be shared with permissions, as detailed in the [concepts](/user/concepts).

### Language reference

You can find the Language Reference in the right assist panel. The right panel itself has a new look with icons on the left hand side and can be minimised by clicking the active icon.

The filter input on top will only filter on the topic titles in this initial version. Below is an example on how to find documentation about joins in select statements.

While editing a statement there’s a quicker way to find the language reference for the current statement type, just right-click the first word and the reference appears in a popover below:

### Variables

Variables are used to easily configure parameters in a query. They are ideal for saving reports that can be shared or executed repetitively:

**Single Valued**

select * from web_logs where country_code = "${country_code}"

**The variable can have a default value**

select * from web_logs where country_code = "${country_code=US}"

**Multi Valued**

select * from web_logs where country_code = "${country_code=CA, FR, US}"

**In addition, the displayed text for multi valued variables can be changed**

select * from web_logs where country_code = "${country_code=CA(Canada), FR(France), US(United States)}"

**For values that are not textual, omit the quotes.**

select * from boolean_table where boolean_column = ${boolean_column}

### Charting

These visualizations are convenient for plotting chronological data or when subsets of rows have the same attribute: they will be stacked together.

* Pie

* Bar/Line with pivot

* Timeline

* Scattered plot

* Maps (Marker and Gradient)

### Notebook mode

Snippets of different dialects can be added into a single page:

### Dark mode

Initially this mode is limited to the actual editor area and we’re considering extending this to cover all of Hue.

To toggle the dark mode you can either press `Ctrl-Alt-T` or `Command-Option-T` on Mac while the editor has focus. Alternatively you can control this through the settings menu which is shown by pressing `Ctrl-`, or `Command-`, on Mac.

### Query troubleshooting

#### Pre-query execution

**Popular values**

The autocompleter will suggest popular tables, columns, filters, joins, group by, order by etc. based on metadata from Navigator Optimizer. A new “Popular” tab has been added to the autocomplete result dropdown which will be shown when there are popular suggestions available.

This is particularly useful for doing joins on unknown datasets or getting the most interesting columns of tables with hundreds of them.

**Risk alerts**

While editing, Hue will run your queries through Navigator Optimizer in the background to identify potential risks that could affect the performance of your query. If a risk is identified an exclamation mark is shown above the query editor and suggestions on how to improve it is displayed in the lower part of the right assistant panel.

#### During execution

The Query Browser details the plan of the query and the bottle necks. When detected, "Health" risks are listed with suggestions on how to fix them.

#### Post-query execution

A new experimental panel when enabled can offer post risk analysis and recommendation on how to tweak the query for better speed.

### Presentation Mode

Turns a list of semi-colon separated queries into an interactive presentation by clicking on the 'Dashboard' icon. It is great for doing demos or reporting.

### Scheduling

Scheduling of queries is currently done via Apache Oozie and will be open to other schedulers with [HUE-3797](https://issues.cloudera.org/browse/HUE-3797).

## Databases & Datawarehouses

### List

Use the Editor or Dashboard to query [any database or datawarehouse](/administrator/configuration/connectors/). Those databases currently need to be first configured by the administrator.

### Autocompletes & Connectors

Also read about building some [better autocompletes](/developer/parsers/) or extending the connectors with SQL Alchemy, JDBC or building your own [connectors](/developer/sdk).

## Dashboards

Dashboards are an interactive way to explore your SQL or Solr data quickly and easily. No programming is required and the analysis is done by drag & drops and clicks.

Simply drag & drop widgets that are interconnected together. This is great for exploring new datasets or monitoring without having to type.

Currently supported databases are Apache Solr, Apache Hive and Apache Impala. To add [more databases](/user/querying/#databases-datawarehouses), feel free to check the [SDK](/developer/sdk/).

Tutorials

* The top search bar offers a [full autocomplete](http://gethue.com/intuitively-discovering-and-exploring-a-wine-dataset-with-the-dynamic-dashboards/) on all the values of the index

* Comprehensive demo is available on the [BikeShare data visualization post](http://gethue.com/bay-area-bikeshare-data-analysis-with-search-and-spark-notebook/).

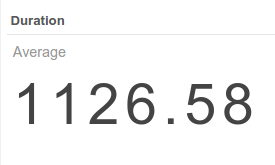

### Analytics facets

Drill down the dimensions of the datasets and apply aggregates functions on top of it:

Some facets can be nested:

### Autocomplete

The top bar support faceted and free word text search, with autocompletion.

### Marker Map

Points close to each other are grouped together and will expand when zooming-in. A Yelp-like search filtering experience can also be created by checking the box.

### Edit records

Indexed records can be directly edited in the Grid or HTML widgets by admins.

### Link to original documents

Links to the original documents can also be inserted. Add to the record a field named ‘link-meta’ that contains some json describing the URL or address of a table or file that can be open in the HBase Browser, Metastore App or File Browser:

Any link

{'type': 'link', 'link': 'gethue.com'}

HBase Browser

{'type': 'hbase', 'table': 'document_demo', 'row_key': '20150527'}

{'type': 'hbase', 'table': 'document_demo', 'row_key': '20150527', 'fam': 'f1'}

{'type': 'hbase', 'table': 'document_demo', 'row_key': '20150527', 'fam': 'f1', 'col': 'c1'}

File Browser

{'type': 'hdfs', 'path': '/data/hue/file.txt'}

Table Catalog

{'type': 'hive', 'database': 'default', 'table': 'sample_07'}

### Save queries

Current selected facets and filters, query strings can be saved with a name within the dashboard. These are useful for defining “cohorts” or pre-selection of records and quickly reloading them.

### ‘Fixed’ or ‘rolling’ time window

Real time indexing can now shine with the rolling window filter and the automatic refresh of the dashboard every N seconds. See it in action in the real time Twitter indexing with Spark streaming post.

### 'More like this'

This feature lets you selected fields you would like to use to find similar records. This is a great way to find similar issues, customers, people... with regard to a list of attributes.

## Jobs

In addition to SQL queries, the Editor application enables you to create and submit batch jobs to the cluster.

### Spark

#### Interactive

Hue relies on [Livy](http://livy.io/) for the interactive Scala, Python and R snippets.

Livy got initially developed in the Hue project but got a lot of traction and was moved to its own project on livy.io. Here is a tutorial on how to use a notebook to perform some Bike Data analysis.

Read more about it:

* [How to use the Livy Spark REST Job Server API for doing some interactive Spark with curl](http://gethue.com/how-to-use-the-livy-spark-rest-job-server-for-interactive-spark-2-2/)

* [How to use the Livy Spark REST Job Server API for submitting batch jar, Python and Streaming Jobs](http://gethue.com/how-to-use-the-livy-spark-rest-job-server-api-for-submitting-batch-jar-python-and-streaming-spark-jobs/)

Make sure that the Notebook and interpreters are set in the hue.ini, and Livy is up and running:

[spark]

# Host address of the Livy Server.

livy_server_host=localhost

[notebook]

show_notebooks=true

[[interpreters]]

[[[hive]]]

name=Hive

interface=hiveserver2

[[[spark]]]

name=Scala

interface=livy

[[[pyspark]]]

name=PySpark

interface=livy

#### Batch

This is a quick way to submit any Jar or Python jar/script to a cluster via the Scheduler or Editor.

How to run Spark jobs with Spark on YARN? This often requires trial and error in order to make it work.

Hue is leveraging Apache Oozie to submit the jobs. It focuses on the yarn-client mode, as Oozie is already running the spark-summit command in a MapReduce2 task in the cluster. You can read more about the Spark modes here.

[Here is how to get started successfully](http://gethue.com/how-to-schedule-spark-jobs-with-spark-on-yarn-and-oozie/).

And how to use the [Spark Action](http://gethue.com/use-the-spark-action-in-oozie/).

### Pig

Type [Apache Pig](https://pig.apache.org/) Latin instructions to load/merge data to perform ETL or Analytics.

### Sqoop

Run an SQL import from a traditional relational database via an [Apache Sqoop](https://sqoop.apache.org/) command.

### Shell

Type or specify a path to a regular shell script.

[Read more about it here](http://gethue.com/use-the-shell-action-in-oozie/).

### Java

A Java job design consists of a main class written in Java.

| Field | Description |
|---|---|
| Jar path | The fully-qualified path to a JAR file containing the main class. |
| Main class | The main class to invoke the program. |
| Args | The arguments to pass to the main class. |
| Java opts | The options to pass to the JVM. |