The latest Hue releases are improving the user experience, and Hue 4 will provide an even simpler solution.

If you have any questions, feel free to comment here or on the hue-user list or @gethue!

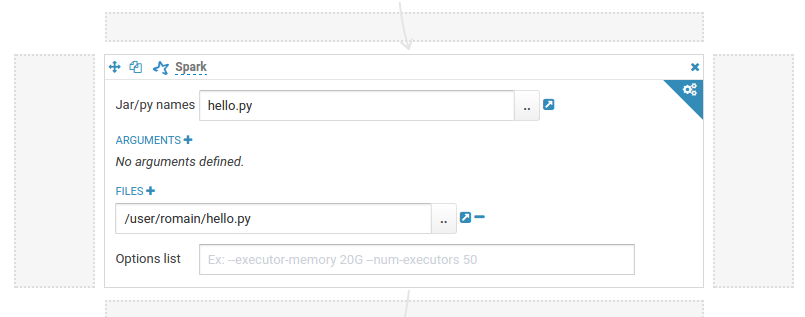


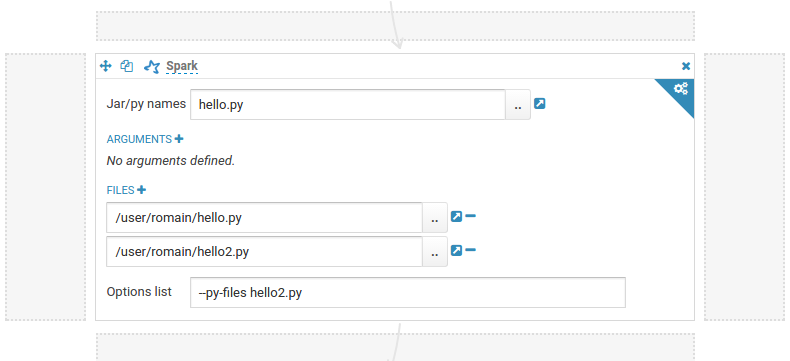

Script with a dependency on another script (e.g. hello imports hello2).
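
You can mimic the hello/hello2 setup locally to see what the File dependency provides: `import hello2` only works because `hello2.py` ends up next to the main script on the node that runs it. This is a hypothetical, cluster-free simulation. The contents of `hello2.py` and the `greet` function are made up, and the sketch assumes, rather than demonstrates, that the shipped file lands in the job's working directory.

```python
import importlib
import os
import sys
import tempfile

# Hypothetical contents of hello2.py, the script added as a File dependency.
HELLO2_SOURCE = """\
def greet(name):
    return "Hello " + name
"""


def run_hello(workdir):
    """Stand-in for hello.py: import hello2 and call it."""
    # Assumption: the File dependency is copied into the job's working
    # directory, so that directory only needs to be importable.
    if workdir not in sys.path:
        sys.path.insert(0, workdir)
    importlib.invalidate_caches()
    hello2 = importlib.import_module("hello2")
    return hello2.greet("World")


with tempfile.TemporaryDirectory() as workdir:
    # Stage hello2.py the way a shipped File would appear on the node.
    with open(os.path.join(workdir, "hello2.py"), "w") as f:
        f.write(HELLO2_SOURCE)
    message = run_hello(workdir)

print(message)  # Hello World
```

In the real job, hello.py would also create its Spark context. Only the import line matters for the dependency.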


For more complex dependencies, like Pandas, have a look at this [documentation][5].
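
For pure-Python dependencies, a common approach is to zip them and hand the archive to Spark. The `--py-files` option of `spark-submit` places `.zip`, `.egg` or `.py` files on the `PYTHONPATH`. This approach does not work for Pandas itself, because Python cannot import compiled extension modules from a zip archive. The sketch below uses a made-up package `mylib` and only checks locally that a zipped package becomes importable once the archive is on the path.

```python
import importlib
import os
import shutil
import sys
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    # Hypothetical pure-Python package "mylib" (no compiled extensions).
    pkg_dir = os.path.join(tmp, "src", "mylib")
    os.makedirs(pkg_dir)
    with open(os.path.join(pkg_dir, "__init__.py"), "w") as f:
        f.write("def double(x):\n    return 2 * x\n")

    # Zip with mylib/ at the archive root, the layout zipimport expects.
    archive = shutil.make_archive(
        os.path.join(tmp, "deps"), "zip", root_dir=os.path.join(tmp, "src")
    )

    # Putting the archive on sys.path mirrors what --py-files does
    # for the driver and executors.
    sys.path.insert(0, archive)
    importlib.invalidate_caches()
    mylib = importlib.import_module("mylib")
    result = mylib.double(21)
    sys.path.remove(archive)

print(archive.endswith("deps.zip"), result)  # True 42
```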

## Jars (Java or Scala)

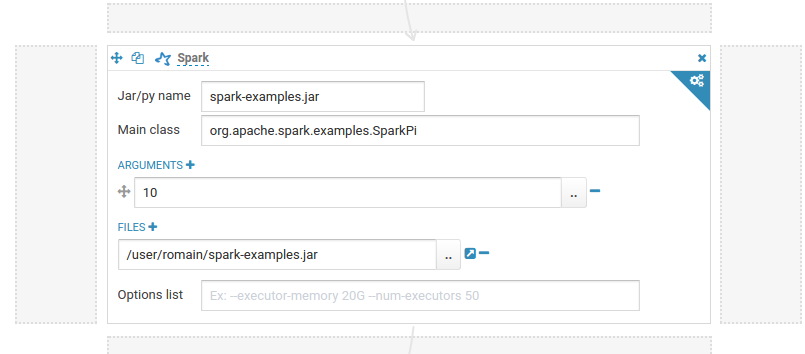

Add the jars as File dependency and specify the name of the main jar:
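
For comparison, here is the same idea as a plain `spark-submit` command line. The main jar becomes the application jar, and the remaining jars go on the driver and executor classpath through `--jars`. The helper below is hypothetical, as are the jar names, the main class and the `--master yarn` choice. It does not reflect how Hue or Oozie build the action. It only makes the distinction between the "main jar" and the other jars concrete.

```python
from typing import List, Sequence


def build_spark_submit(jars: Sequence[str], main_jar: str, main_class: str,
                       args: Sequence[str] = ()) -> List[str]:
    """Hypothetical helper: turn File dependencies plus the main jar name
    into a spark-submit argument list."""
    if main_jar not in jars:
        raise ValueError(f"main jar {main_jar!r} is not among the File dependencies")
    # Every other jar is only needed on the driver/executor classpath.
    extra = [j for j in jars if j != main_jar]
    cmd = ["spark-submit", "--master", "yarn", "--class", main_class]
    if extra:
        cmd += ["--jars", ",".join(extra)]
    # The application jar comes last, followed by its own arguments.
    cmd.append(main_jar)
    cmd.extend(args)
    return cmd


cmd = build_spark_submit(
    ["app.jar", "lib-a.jar", "lib-b.jar"], "app.jar", "com.example.Main", ["in", "out"]
)
print(" ".join(cmd))
# spark-submit --master yarn --class com.example.Main --jars lib-a.jar,lib-b.jar app.jar in out
```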


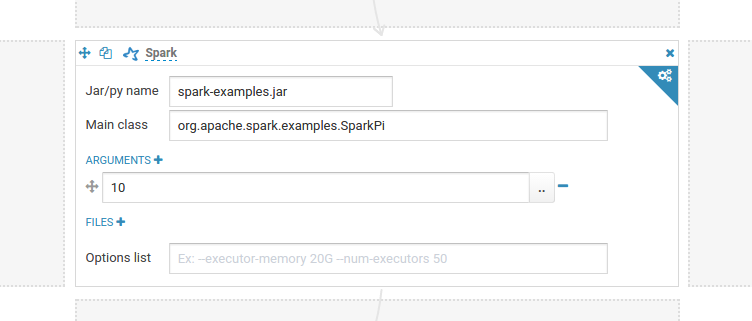

Another solution is to put your jars in the 'lib' directory in the workspace ('Folder' icon on the top right of the editor).
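
This option works because of an Oozie convention: JAR files in the `lib/` directory of the workflow application are added to the classpath of its actions. The sketch below builds a local stand-in for such a workspace and lists the jars that would be picked up. The file names are hypothetical. The sketch also assumes that only `.jar` files placed directly in `lib/` count, so it ignores subdirectories.

```python
import tempfile
from pathlib import Path


def jars_on_classpath(workspace):
    """List the jars the lib/ convention would pick up (assumption:
    only *.jar files directly inside lib/, not in its subdirectories)."""
    lib = Path(workspace) / "lib"
    if not lib.is_dir():
        return []
    return sorted(p.name for p in lib.iterdir() if p.is_file() and p.suffix == ".jar")


with tempfile.TemporaryDirectory() as tmp:
    workspace = Path(tmp)
    # Placeholder for the workflow definition that Hue keeps in the workspace.
    (workspace / "workflow.xml").write_text("")
    (workspace / "lib").mkdir()
    for name in ["spark-app.jar", "dependency.jar", "README.txt"]:
        (workspace / "lib" / name).write_text("")
    found = jars_on_classpath(workspace)

print(found)  # ['dependency.jar', 'spark-app.jar']
```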


[1]: http://oozie.apache.org/

[2]: https://gethue.com/use-the-spark-action-in-oozie/

[3]: https://cdn.gethue.com/uploads/2016/08/oozie-pyspark-simple.png

[4]: https://cdn.gethue.com/uploads/2016/08/oozie-pyspark-dependencies.png

[5]: http://www.cloudera.com/documentation/enterprise/latest/topics/spark_python.html

[6]: https://cdn.gethue.com/uploads/2016/08/spark-action-jar.png

[7]: https://cdn.gethue.com/uploads/2016/08/oozie-spark-lib2.png
